3. High-level multi-threading

Face it: multi-threaded programming is hard. It is hard to design a multi-threaded program, it is hard to write and test it, and it is insanely hard to debug it. To ease this problem, OmniThreadLibrary introduces several pre-packaged multi-threading solutions, so-called abstractions.

The idea behind the high-level abstractions is that the user should just choose the appropriate abstraction and write the worker code, while OmniThreadLibrary provides the framework that implements the tricky multi-threaded parts, takes care of synchronisation, and so on.

3.1 Introduction

High-level abstractions are implemented in the OtlParallel unit. They are all created through the factory class Parallel. High-level code makes heavy use of anonymous methods and generics, which makes Delphi 2009 the minimum supported version. As the implementation of generics in D2009 and D2010 is not very stable, I’d recommend using at least Delphi XE.

3.1.1 A life cycle of an abstraction

A typical factory in the Parallel class returns an interface. For example, Parallel implements five Join overloads which create a Join abstraction.

class function Join: IOmniParallelJoin; overload;
class function Join(const task1, task2: TProc):
  IOmniParallelJoin; overload;
class function Join(const task1, task2: TOmniJoinDelegate):
  IOmniParallelJoin; overload;
class function Join(const tasks: array of TProc):
  IOmniParallelJoin; overload;
class function Join(const tasks: array of TOmniJoinDelegate):
  IOmniParallelJoin; overload;

An important fact to keep in mind is that the Join abstraction (or any other abstraction returned from any of the Parallel factories) stays alive only as long as this interface is not destroyed. For example, the following code fragment works fine.

procedure Test_OK;
begin
  Parallel.Join(
    procedure
    begin
      Sleep(10000);
    end,
    procedure
    begin
      Sleep(15000);
    end
  ).Execute;
end;

The interface returned from the Parallel.Join call is stored in a hidden variable, which is only destroyed when the end statement of the Test_OK procedure is executed. As Execute waits for all subtasks to complete, Join completes its execution before end is executed and before the interface is destroyed.

Modify the code slightly and it will not work anymore.

procedure Test_Fail;
begin
  Parallel.Join(
    procedure
    begin
      Sleep(10000);
    end,
    procedure
    begin
      Sleep(15000);
    end
  ).NoWait.Execute;
end;

Because of the NoWait modifier, Execute does not wait for the subtasks to complete but returns immediately. Next, the end statement is executed and destroys the abstraction while the subtasks are still running.

In such cases, it is important to keep the interface in a field that outlives the procedure (typically it will be stored inside a form or another object) and stays alive until the abstraction has stopped.

procedure Test_OK_Again;
begin
  FJoin := Parallel.Join(
    procedure
    begin
      Sleep(10000);
    end,
    procedure
    begin
      Sleep(15000);
    end
  ).NoWait.Execute;
end;

This leads to a new problem: when should this interface be destroyed? The answer depends on the abstraction used. Some abstractions provide an OnStop method which can be used for this purpose. For other abstractions, you should use the termination handler of the task configuration block.
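The pattern can be illustrated with a small console program. This is a minimal sketch, not one of the OmniThreadLibrary demos. The Join interface is kept in a variable that outlives the NoWait.Execute call, and it is only released after both subtasks have finished. The sketch assumes that IOmniParallelJoin provides WaitFor(timeout_ms) in the same way IOmniFuture<T> does. In a GUI program you would normally release the interface from OnStop or a termination handler instead of blocking.

```pascal
program JoinLifetime;

{$APPTYPE CONSOLE}

uses
  Winapi.Windows,  // INFINITE
  System.SysUtils,
  OtlParallel;

var
  FJoin: IOmniParallelJoin; // lives longer than the NoWait.Execute call
  Done1, Done2: boolean;

begin
  Done1 := false;
  Done2 := false;

  FJoin := Parallel.Join(
    procedure
    begin
      Sleep(1000);
      Done1 := true;
    end,
    procedure
    begin
      Sleep(1500);
      Done2 := true;
    end
  ).NoWait.Execute; // returns immediately

  Writeln('Join started, main thread continues ...');

  // Assumption: WaitFor blocks until all subtasks have completed.
  FJoin.WaitFor(INFINITE);
  FJoin := nil; // safe now: both subtasks have finished
  Writeln('Both subtasks done: ', Done1 and Done2);
end.
```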

3.1.2 Anonymous methods, procedures, and methods

High-level abstractions are very much based on anonymous methods. They are used all over the OtlParallel unit, and they will also be used in your own code because they provide the simplest way to interact with high-level threading. All delegates (pieces of code that you ‘plug into’ the high-level infrastructure) are declared as anonymous methods.

That, however, does not force you to write anonymous methods to use high-level multi-threading. Thanks to the Delphi compiler, you can always provide a normal function/procedure or a method when an anonymous method is required.

For example, let’s look at the IOmniWorkItemConfig interface. Among other things, it defines the OnExecute method, which accepts a delegate of type TOmniBackgroundWorkerDelegate.

TOmniBackgroundWorkerDelegate = reference to procedure (
  const workItem: IOmniWorkItem);

IOmniWorkItemConfig = interface
  function OnExecute(const aTask: TOmniBackgroundWorkerDelegate):
    IOmniWorkItemConfig;
  ...
end;

Let’s assume a variable of the appropriate type, config: IOmniWorkItemConfig. You can then call the OnExecute method using an anonymous method.

config.OnExecute(
  procedure(const workItem: IOmniWorkItem)
  begin
    ...
  end);

Alternatively, you could declare a ‘normal’ procedure with the same signature (with the same parameters) and pass it to the OnExecute call.

procedure OnConfigExecute(const workItem: IOmniWorkItem);
begin
  ...
end;

config.OnExecute(OnConfigExecute);

The third option is to pass a method of some class to the OnExecute.

procedure TMyClass.OnConfigExecute(const workItem: IOmniWorkItem);
begin
  ...
end;

procedure TMyClass.DoConfig;
begin
  config.OnExecute(OnConfigExecute);
end;

These three options are valid whenever an anonymous method delegate can be used.
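The console sketch below shows that the three kinds of delegate are interchangeable. It passes an anonymous function, a normal function and a method of an object to Parallel.Future<integer>. The names ComputePlain and TCalculator are hypothetical and exist only for this illustration. It uses the delegate form with an IOmniTask parameter, as in the CountTo100 example later in this chapter. This sidesteps any ambiguity between passing a parameterless function and calling it.

```pascal
program DelegateKinds;

{$APPTYPE CONSOLE}

uses
  System.SysUtils,
  OtlTask,
  OtlParallel;

type
  // hypothetical class, used only to supply a method as a delegate
  TCalculator = class
    function Compute(const task: IOmniTask): integer;
  end;

function TCalculator.Compute(const task: IOmniTask): integer;
begin
  Result := 3;
end;

// hypothetical 'normal' function with the delegate's signature
function ComputePlain(const task: IOmniTask): integer;
begin
  Result := 2;
end;

var
  calc: TCalculator;
  f1, f2, f3: IOmniFuture<integer>;
  total: integer;

begin
  calc := TCalculator.Create;
  try
    // 1. anonymous method
    f1 := Parallel.Future<integer>(
      function (const task: IOmniTask): integer
      begin
        Result := 1;
      end);
    // 2. normal function
    f2 := Parallel.Future<integer>(ComputePlain);
    // 3. method of an object
    f3 := Parallel.Future<integer>(calc.Compute);
    // Value waits for each background calculation to finish
    total := f1.Value + f2.Value + f3.Value;
    Writeln('Total = ', total);
  finally
    calc.Free;
  end;
end.
```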

3.1.3 Pooling

Starting a thread is a relatively slow operation, which is why threads used in the high-level abstractions are not constantly created and destroyed. Instead, they are allocated from a thread pool.

All high-level abstractions use the same thread pool, GlobalParallelPool. It has public visibility in case you need to configure its parameters.

function GlobalParallelPool: IOmniThreadPool;

This behaviour can be overridden by using the task configuration block.

The following rules hold for all tasks created by the high-level abstractions:

- If a task configuration block is not specified, the task is created in the OtlParallel.GlobalParallelPool pool.
- If a task configuration block is specified:
  - If ThreadPool(aPool) is called, the task is created in the aPool pool.
  - If ThreadPool(nil) is called, the task is created in the default OtlThreadPool.GlobalOmniThreadPool pool.
  - If NoThreadPool is called, the task is not created in a pool but uses a newly created thread instead (IOW, Run is used instead of Schedule).
  - Otherwise, the task is created in the OtlParallel.GlobalParallelPool pool (same as when a task configuration block is not specified).

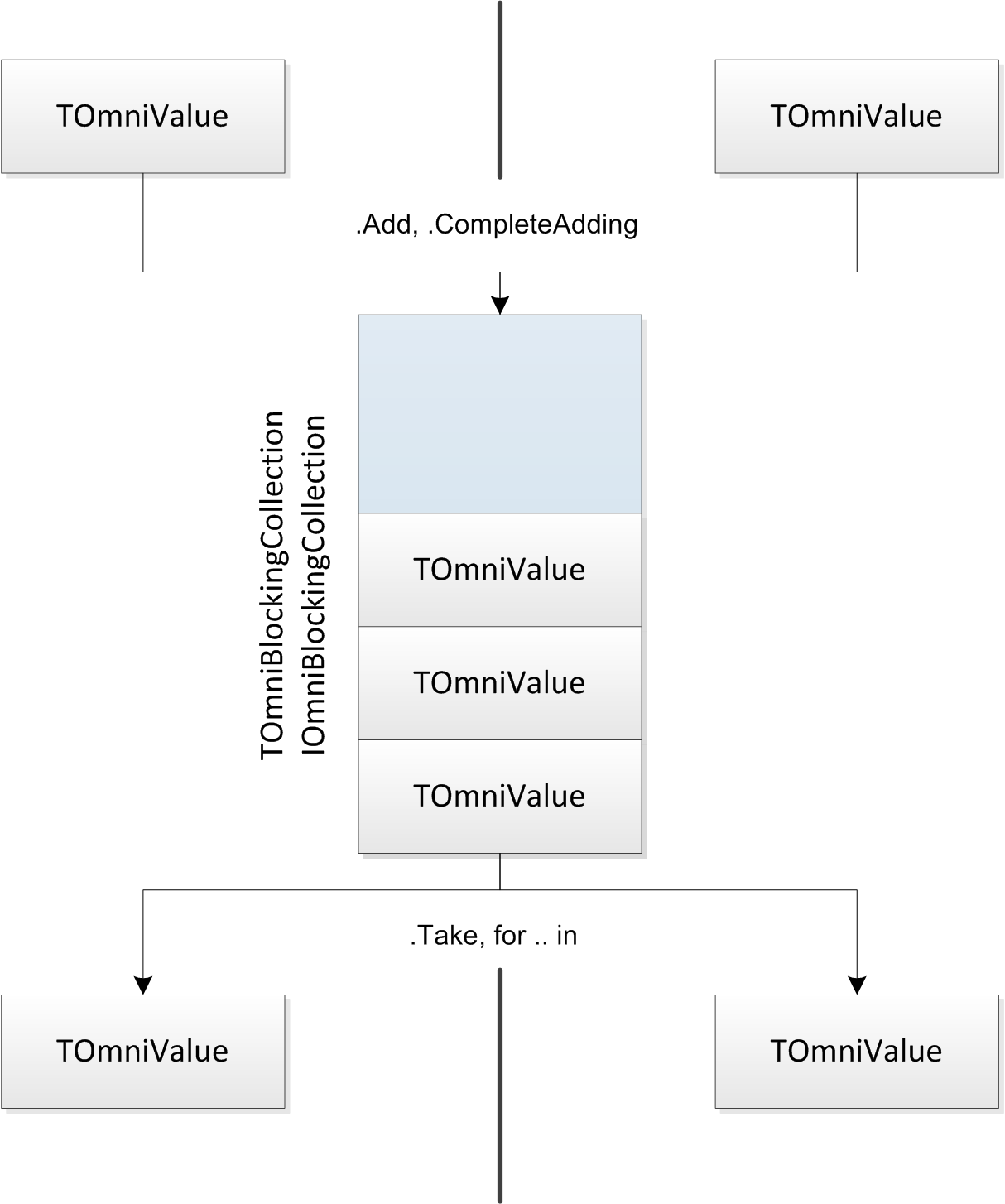
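The pool-selection rules above can be illustrated with a console sketch. It runs the same Future through each option in turn:

- the default GlobalParallelPool;
- a dedicated pool created with CreateThreadPool from OtlThreadPool;
- GlobalOmniThreadPool, selected with ThreadPool(nil);
- a fresh thread, selected with NoThreadPool.

The MaxExecuting value of 4 and the pool name are arbitrary choices for this illustration. The futures are awaited one at a time to keep the example short.

```pascal
program PoolSelection;

{$APPTYPE CONSOLE}

uses
  System.SysUtils,
  OtlThreadPool,
  OtlParallel;

var
  work: TOmniFutureDelegate<integer>;
  dedicatedPool: IOmniThreadPool;
  total: integer;

begin
  // Limit how many high-level tasks may run at once in the shared pool
  // (4 is an arbitrary value chosen for this sketch).
  GlobalParallelPool.MaxExecuting := 4;

  work :=
    function: integer
    begin
      Sleep(100);
      Result := 1;
    end;

  dedicatedPool := CreateThreadPool('Dedicated pool');

  // No task configuration block: OtlParallel.GlobalParallelPool.
  total := Parallel.Future<integer>(work).Value;

  // ThreadPool(aPool): the task runs in dedicatedPool.
  total := total + Parallel.Future<integer>(work,
    Parallel.TaskConfig.ThreadPool(dedicatedPool)).Value;

  // ThreadPool(nil): OtlThreadPool.GlobalOmniThreadPool.
  total := total + Parallel.Future<integer>(work,
    Parallel.TaskConfig.ThreadPool(nil)).Value;

  // NoThreadPool: a newly created thread, no pool at all.
  total := total + Parallel.Future<integer>(work,
    Parallel.TaskConfig.NoThreadPool).Value;

  Writeln('Completed tasks: ', total);
end.
```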

3.2 Blocking collection

The blocking collection is a Delphi clone of the .NET 4 BlockingCollection. It is a thread-safe collection that supports multiple simultaneous readers and writers. This implementation of the blocking collection works only with TOmniValue elements. That is not a big limitation, because TOmniValue can store anything, including a class, an interface and a record.

See also demo 33_BlockingCollection.

3.2.1 IOmniBlockingCollection

The blocking collection is exposed as an interface that lives in the OtlCollections unit.

IOmniBlockingCollection = interface
  procedure Add(const value: TOmniValue);
  procedure CompleteAdding;
  function GetEnumerator: IOmniValueEnumerator;
  function IsCompleted: boolean;
  function IsEmpty: boolean;
  function IsFinalized: boolean;
  function Next: TOmniValue;
  procedure ReraiseExceptions(enable: boolean = true);
  procedure SetThrottling(highWatermark, lowWatermark: integer);
  function Take(var value: TOmniValue): boolean;
  function TryAdd(const value: TOmniValue): boolean;
  function TryTake(var value: TOmniValue;
    timeout_ms: cardinal = 0): boolean;
  property ContainerSubject: TOmniContainerSubject
    read GetContainerSubject;
  property Count: integer read GetApproxCount;
end;

There’s also a class TOmniBlockingCollection which implements this interface. This class is public and can be used in your code.

The blocking collection works in the following way:

- Add adds a new value to the collection (which is internally implemented as a queue).
- CompleteAdding tells the collection that all data has been added. From then on, calling Add will raise an exception.
- TryAdd is the same as Add, except that it doesn’t raise an exception. Instead, it returns False if the value can’t be added (that is, if CompleteAdding was already called).
- IsCompleted returns True after CompleteAdding has been called.
- IsEmpty returns True if the collection is empty. [3.07]
- Count returns the number of elements in the collection. [3.07]
- IsFinalized returns True if CompleteAdding has been called and the collection is empty.
- Next retrieves the next element from the collection (by calling Take) and returns it as the result. If Take fails, an ECollectionCompleted exception is raised.
- ReraiseExceptions enables or disables the internal exception-checking flag. Initially, this flag is disabled. When the flag is enabled and Take or TryTake retrieves a TOmniValue holding an exception object, this exception is immediately raised.
- SetThrottling enables the throttling mechanism. See Throttling for more information.
- Take reads the next value from the collection. If there’s no data in the collection, Take will block until the next value is available. If, however, any other thread calls CompleteAdding while Take is blocked, Take will unblock and return False.
- TryTake is the same as Take, except that it has a timeout parameter specifying the maximum time the call is allowed to wait for the next value. If INFINITE is passed in the timeout parameter, TryTake will block until data is available or CompleteAdding is called.
- The ContainerSubject property enables the blocking collection to partake in the lock-free collection observing mechanism.

The enumerator calls Take in its MoveNext method and returns the value returned from Take. The enumerator will therefore block when there is no data in the collection. The usual way to stop the enumerator is to call CompleteAdding, which will unblock all pending MoveNext calls and stop enumeration.
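A typical producer/consumer setup looks roughly like the sketch below. This console program was written for illustration and is not one of the numbered demos. The main thread produces the values 1 to 100 and then calls CompleteAdding. A Future consumes the values with a for..in loop over the collection, and the loop ends once adding is complete and the collection is empty.

```pascal
program BlockingCollectionDemo;

{$APPTYPE CONSOLE}

uses
  System.SysUtils,
  OtlCommon,
  OtlCollections,
  OtlParallel;

var
  queue: IOmniBlockingCollection;
  consumer: IOmniFuture<integer>;
  i, sum: integer;

begin
  queue := TOmniBlockingCollection.Create;

  // Consumer: the enumerator calls Take, so the loop blocks while the
  // collection is empty and ends after CompleteAdding has been called
  // and all remaining items have been read.
  consumer := Parallel.Future<integer>(
    function: integer
    var
      value: TOmniValue;
    begin
      Result := 0;
      for value in queue do
        Inc(Result, value.AsInteger);
    end);

  // Producer: the main thread adds 1..100 ...
  for i := 1 to 100 do
    queue.Add(i);
  // ... and then signals that no more data will arrive.
  queue.CompleteAdding;

  sum := consumer.Value; // 1 + 2 + ... + 100
  Writeln('Sum = ', sum);
end.
```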

3.2.2 Bulk import and export

The TOmniBlockingCollection class implements a set of functions for fast import of large quantities of data, and for simple extraction of all data into an array.

class function FromArray<T>(const values: TArray<T>):
  IOmniBlockingCollection; inline;
class function FromRange<T>(const values: array of T):
  IOmniBlockingCollection; overload;
class function FromRange<T>(const collection: IEnumerable<T>):
  IOmniBlockingCollection; overload; inline;
class function FromRange<T>(const collection: TEnumerable<T>):
  IOmniBlockingCollection; overload; inline;
class function ToArray<T>(const coll: IOmniBlockingCollection): TArray<T>;
procedure AddRange<T>(const values: array of T); overload;
procedure AddRange<T>(const collection: IEnumerable<T>); overload;
procedure AddRange<T>(const collection: TEnumerable<T>); overload;

The three FromRange<T> overloads [3.07.6] create a new IOmniBlockingCollection and populate its content from an array or a collection.

The FromArray<T> function [3.07.6] is an alias for the FromRange<T> function accepting an array.

The three AddRange<T> methods [3.07.6] keep the current content of the blocking collection intact and add new data to the collection.

The ToArray<T> function returns the entire content of the blocking collection repacked into a dynamic array. Returned data is removed from the blocking collection.

Demo 61_CollectionToArray shows how to use ToArray<T>.

Demo 67_ArrayToCollection shows how to use FromArray<T> and FromRange<T>.
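The following sketch shows a round trip through the bulk functions. It fills the collection with AddRange<T>, which needs a TOmniBlockingCollection reference rather than the interface. It then marks the collection complete and drains it with ToArray<T>. Calling CompleteAdding before ToArray<T> is a precaution on my part; the description above does not require it.

```pascal
program BulkRoundTrip;

{$APPTYPE CONSOLE}

uses
  System.SysUtils,
  OtlCollections;

var
  coll: TOmniBlockingCollection;   // class reference, needed for AddRange<T>
  intf: IOmniBlockingCollection;   // interface reference, keeps coll alive
  data: TArray<integer>;
  i, sum: integer;

begin
  coll := TOmniBlockingCollection.Create;
  intf := coll; // from now on the object is managed by reference counting

  coll.AddRange<integer>([10, 20, 30]);
  intf.CompleteAdding; // precaution: no more data will be added

  // Extract everything; the values are removed from the collection.
  data := TOmniBlockingCollection.ToArray<integer>(intf);

  sum := 0;
  for i := 0 to High(data) do
    sum := sum + data[i];
  Writeln('Items: ', Length(data), ', sum: ', sum);
  Writeln('Collection empty: ', intf.IsEmpty);
end.
```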

3.2.3 Throttling

Normally, a blocking collection can grow without limits and can fill up the available memory. If the algorithm doesn’t prevent this intrinsically, it is sometimes useful to set up throttling, a mechanism which blocks additions when the blocking collection size reaches some predetermined value (high watermark) and which allows additions again when the size reaches another predetermined value (low watermark).

procedure SetThrottling(highWatermark, lowWatermark: integer);

The behaviour of Add, TryAdd, Take and TryTake is modified if throttling is used.

When Add or TryAdd is called and the number of elements in the blocking collection equals highWatermark, the code blocks. It will only continue if the number of elements in the collection falls below lowWatermark or if CompleteAdding is called.

Suppose Take or TryTake takes an element from the collection while adding is temporarily blocked by throttling. If the new number of elements in the collection is below lowWatermark, all waiting Add and TryAdd calls are unblocked.
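The following is a minimal sketch of throttling; the watermark values 100 and 50 are arbitrary. A background Future adds 10,000 values while the main thread consumes them with Take. Whenever 100 values are waiting, Add blocks until the consumer has drained the collection below 50 elements.

```pascal
program ThrottlingDemo;

{$APPTYPE CONSOLE}

uses
  System.SysUtils,
  OtlCommon,
  OtlCollections,
  OtlParallel;

var
  queue: IOmniBlockingCollection;
  producer: IOmniFuture<integer>;
  value: TOmniValue;
  sum: int64;

begin
  queue := TOmniBlockingCollection.Create;
  // Block Add/TryAdd at 100 queued items; resume once fewer than 50 remain.
  queue.SetThrottling(100, 50);

  producer := Parallel.Future<integer>(
    function: integer
    var
      i: integer;
    begin
      for i := 1 to 10000 do
        queue.Add(i); // blocks while the high watermark is reached
      queue.CompleteAdding;
      Result := 10000;
    end);

  sum := 0;
  // Take blocks while the queue is empty and returns False once
  // CompleteAdding has been called and all items have been taken.
  while queue.Take(value) do
    Inc(sum, value.AsInteger);

  Writeln('Produced ', producer.Value, ' items, sum = ', sum);
end.
```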

3.3 Task configuration

High-level abstractions will do most of the hard work for you, but sometimes you’ll still have to apply some configuration to the low-level parallel tasks (entities represented with the IOmniTask interface). The mechanism for doing low-level configuration is called task configuration block.

Task configuration block, or IOmniTaskConfig, is an interface returned from the Parallel.TaskConfig factory function.

class function Parallel.TaskConfig: IOmniTaskConfig;
begin
  Result := TOmniTaskConfig.Create;
end;

This interface contains various functions that set up messaging handlers, termination handlers and so on. All functions return the interface itself, so they can be used in a fluent manner.

IOmniTaskConfig = interface
  procedure Apply(const task: IOmniTaskControl);
  function CancelWith(
    const token: IOmniCancellationToken): IOmniTaskConfig;
  function MonitorWith(
    const monitor: IOmniTaskControlMonitor): IOmniTaskConfig;
  function NoThreadPool: IOmniTaskConfig;
  function OnMessage(
    eventDispatcher: TObject): IOmniTaskConfig; overload;
  function OnMessage(
    eventHandler: TOmniTaskMessageEvent): IOmniTaskConfig; overload;
  function OnMessage(
    msgID: word;
    eventHandler: TOmniTaskMessageEvent): IOmniTaskConfig; overload;
  function OnMessage(
    msgID: word;
    eventHandler: TOmniOnMessageFunction): IOmniTaskConfig; overload;
  function OnTerminated(
    eventHandler: TOmniTaskTerminatedEvent): IOmniTaskConfig; overload;
  function OnTerminated(
    eventHandler: TOmniOnTerminatedFunction): IOmniTaskConfig; overload;
  function OnTerminated(
    eventHandler: TOmniOnTerminatedFunctionSimple): IOmniTaskConfig;
    overload;
  function SetPriority(threadPriority: TOTLThreadPriority): IOmniTaskConfig;
  function ThreadPool(const threadPool: IOmniThreadPool): IOmniTaskConfig;
  function WithCounter(
    const counter: IOmniCounter): IOmniTaskConfig;
  function WithLock(
    const lock: TSynchroObject;
    autoDestroyLock: boolean = true): IOmniTaskConfig; overload;
  function WithLock(
    const lock: IOmniCriticalSection): IOmniTaskConfig; overload;
end;

- Apply applies the task configuration block to a task. It is used internally in the OtlParallel unit.
- CancelWith shares a cancellation token with the task.
- MonitorWith attaches the task to the monitor.
- NoThreadPool instructs the OtlParallel architecture to create a new thread to execute the worker task instead of running the task in a thread pool. [3.07.2]
- OnMessage functions set up message dispatch to an object (for example, a form) or to an event handler. In the latter case, the event handler can be set for a specific message ID or for all messages.
- OnTerminated sets up a termination handler which is called when the task terminates.
- SetPriority specifies the priority for worker tasks. The default priority (normal) is used if this method is not called.
- ThreadPool specifies the thread pool used for executing worker tasks. If this method is not called, the default thread pool GlobalParallelPool (a singleton defined in the OtlParallel unit) is used. If nil is passed as an argument, the default OmniThreadLibrary pool OtlThreadPool.GlobalOmniThreadPool is used.
- WithCounter shares a counter with the task.
- WithLock shares a lock object with the task.

Examples of task configuration block usage are demonstrated in demo 47_TaskConfig. Some examples are also given in sections describing individual abstractions.
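The sketch below illustrates the fluent style. It shares one counter between two Future tasks through WithCounter, and it also chains NoThreadPool onto the second configuration block. It assumes the low-level helper CreateCounter and the IOmniTask.Counter property, neither of which is described in this section, so check them against your OmniThreadLibrary version.

```pascal
program TaskConfigCounter;

{$APPTYPE CONSOLE}

uses
  System.SysUtils,
  OtlCommon,
  OtlSync,
  OtlTask,
  OtlParallel;

// Each task increments the counter shared through the configuration block.
function CountItems(const task: IOmniTask): integer;
var
  i: integer;
begin
  for i := 1 to 1000 do
    task.Counter.Increment; // assumption: Counter is the shared IOmniCounter
  Result := 1000;
end;

var
  counter: IOmniCounter;
  worker1, worker2: IOmniFuture<integer>;
  processed: integer;

begin
  counter := CreateCounter(0); // assumption: CreateCounter from the low-level API

  worker1 := Parallel.Future<integer>(CountItems,
    Parallel.TaskConfig.WithCounter(counter));

  // Configuration calls can be chained because each one returns the interface.
  worker2 := Parallel.Future<integer>(CountItems,
    Parallel.TaskConfig.WithCounter(counter).NoThreadPool);

  processed := worker1.Value + worker2.Value; // waits for both tasks
  Writeln('Processed ', processed, ', shared counter = ', counter.Value);
end.
```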

3.4 Async

Async is the simplest of the high-level abstractions and is typically used for fire-and-forget scenarios. To create an Async task, call Parallel.Async.

See also demo 46_Async.

Example:

Parallel.Async(
  procedure
  begin
    MessageBeep($FFFFFFFF);
  end);

This simple program creates a background task with a sole purpose to make some noise. The task is coded as an anonymous method but you can also use a normal method or a normal procedure for the task code.

The Parallel class defines two Async overloads. The first accepts a parameter-less background task and an optional task configuration block and the second accepts a background task with an IOmniTask parameter and an optional task configuration block.

type
  TOmniTaskDelegate = reference to procedure(const task: IOmniTask);

  Parallel = class
    class procedure Async(task: TProc;
      taskConfig: IOmniTaskConfig = nil); overload;
    class procedure Async(task: TOmniTaskDelegate;
      taskConfig: IOmniTaskConfig = nil); overload;
    ...
  end;

The second form is useful if the background code needs access to the IOmniTask interface, for example, to send messages to the owner or to execute code in the owner thread (typically that will be the main thread).

The example below uses an Async task to fetch the contents of a web page (by calling a mysterious function HttpGet). It then uses Invoke to run code in the main thread that logs the length of the result.

Parallel.Async(
  procedure (const task: IOmniTask)
  var
    page: string;
  begin
    HttpGet('otl.17slon.com', 80, 'tutorials.htm', page, '');
    task.Invoke(
      procedure
      begin
        // executed in the owner (main) thread
        lbLogAsync.Items.Add(Format('Async GET: page length = %d',
          [Length(page)]));
      end);
  end);

The same result could be achieved by sending a message from the background thread to the main thread. In the example below, TaskConfig block is used to configure message handler.

const
  WM_RESULT = WM_USER;

procedure LogResult(const task: IOmniTaskControl; const msg: TOmniMessage);
begin
  // the page contents arrive in the message data
  lbLogAsync.Items.Add(Format('Async GET: page length = %d',
    [Length(msg.MsgData.AsString)]));
end;

Parallel.Async(
  procedure (const task: IOmniTask)
  var
    page: string;
  begin
    HttpGet('otl.17slon.com', 80, 'tutorials.htm', page, '');
    task.Comm.Send(WM_RESULT, page);
  end,
  Parallel.TaskConfig.OnMessage(WM_RESULT, LogResult)
);

Let me warn you that in cases where you want to return a result from a background task, the Async abstraction is not the most appropriate. You would be better off using a Future.

3.4.1 Handling exceptions

If the background code raises an unhandled exception, OmniThreadLibrary catches this exception and re-raises it in the OnTerminated handler. This way the exception travels from the background thread to the owner thread where it can be processed.

The OnTerminated handler executes at an unspecified moment while Windows is processing window messages. Therefore, there is no good way to catch this exception with a try..except block. The caller must install its own OnTerminated handler instead and handle the exception there.

The following example uses an OnTerminated handler to detach the fatal exception from the task, log the exception details and destroy the exception object.

Parallel.Async(
  procedure
  begin
    Sleep(1000);
    raise Exception.Create('Exception in Async');
  end,
  Parallel.TaskConfig.OnTerminated(
    procedure (const task: IOmniTaskControl)
    var
      excp: Exception;
    begin
      if assigned(task.FatalException) then begin
        excp := task.DetachException;
        Log('Caught async exception %s:%s',[excp.ClassName, excp.Message]);
        FreeAndNil(excp);
      end;
    end
  ));

If you don’t install an OnTerminated handler, an exception will be handled by the application-level filter, which will by default cause a message box to appear.

See also demo 48_OtlParallelExceptions.

3.5 Async/Await

Async/Await is a simplified version of the Async abstraction which mimics the .NET Async/Await mechanism.⁴

Async/Await accepts two parameterless anonymous methods. The first one is executed in a background thread and the second one is executed in the main thread after the background thread has completed its work.

See also demo 53_AsyncAwait.

Using Async/Await you can, for example, create a background operation which is triggered by a click and which re-enables the button after the background job has been completed.

procedure TForm1.Button1Click(Sender: TObject);
var
  button: TButton;
begin
  button := Sender as TButton;
  button.Caption := 'Working ...';
  button.Enabled := false;
  Async(
    // executed in a background thread
    procedure begin
      Sleep(5000);
    end).
  Await(
    // executed in the main thread after
    // the anonymous method passed to
    // Async has completed its work
    procedure begin
      button.Enabled := true;
      button.Caption := 'Done!';
    end);
end;

Exceptions in the Async part are currently not handled by the OmniThreadLibrary.

3.6 Future

A Future is a background calculation that returns a result. To create the abstraction, call Parallel.Future<T> (where T is the type returned from the calculation). This will return a result of type IOmniFuture<T>, which is the interface you will use to work with the background task.

To get the result of the calculation, call the Value method on the interface returned from the Parallel.Future call.

See also demo 39_Future.

Example:

var
  FCalculation: IOmniFuture<integer>;

procedure StartCalculation;
begin
  FCalculation := Parallel.Future<integer>(
    function: integer
    var
      i: integer;
    begin
      Result := 0;
      // the sum 1 + ... + 10000 = 50005000 still fits into an integer
      for i := 1 to 10000 do
        Result := Result + i;
    end
  );
end;

function GetResult: integer;
begin
  Result := FCalculation.Value;
  FCalculation := nil;
end;

The Parallel class implements two Future<T> overloads. The first accepts a parameter-less background task and an optional task configuration block and the second accepts a background task with an IOmniTask parameter and an optional task configuration block.

type
  TOmniFutureDelegate<T> = reference to function: T;
  TOmniFutureDelegateEx<T> =
    reference to function(const task: IOmniTask): T;

  Parallel = class
    class function Future<T>(action: TOmniFutureDelegate<T>;
      taskConfig: IOmniTaskConfig = nil): IOmniFuture<T>; overload;
    class function Future<T>(action: TOmniFutureDelegateEx<T>;
      taskConfig: IOmniTaskConfig = nil): IOmniFuture<T>; overload;
    ...
  end;

The second form is useful if the background code needs access to the IOmniTask interface, for example, to send messages to the owner or to execute code in the owner thread (typically that will be the main thread). See the Async section for an example.

A Future task always wraps a function of some type. In the example above, the function added the numbers from 1 to 10000 together and returned an integer result. That’s why the Future task was created by calling Parallel.Future<integer> and why the result (the interface that provides a way to manage the task) is declared as IOmniFuture<integer>. But a Future could equally well return a result of any type: a string, a date/time or even a record, class or interface.

The following example is a rewrite of the Async example. It uses the same mysterious HttpGet function, but wraps it in a more flexible way. Function StartHttpGet accepts a url parameter specifying which page to retrieve from the web server. It then creates a future returning a string and passes it simple code to execute: a one-liner anonymous function that only calls the already known HttpGet.

This example illustrates two important points:

- Anonymous methods are great for capturing variables. If you look at StartHttpGet again, you’ll see that the url parameter is used from two different threads. StartHttpGet is called in the main thread and the anonymous code is executed in the background thread. Still, the parameter somehow makes it across this thread boundary, and that is done by the anonymous method magic.
- Don’t write long and complicated anonymous methods. It is better to call an external method from the anonymous code and make the long and complicated calculation in that external method (or in code called from that method, etc.).

var
  FGetFuture: IOmniFuture<string>;

function HttpGet(const url: string): string;
begin
  // this function fetches a page from the web server
  // and returns its contents
end;

procedure StartHttpGet(const url: string);
begin
  FGetFuture := Parallel.Future<string>(
    function: string
    begin
      Result := HttpGet(url);
    end
  );
end;

function GetResult: string;
begin
  Result := FGetFuture.Value;
  FGetFuture := nil;
end;

3.6.1 IOmniFuture<T> interface

The IOmniFuture<T> interface implements other methods besides Value.

type
  IOmniFuture<T> = interface
    procedure Cancel;
    function DetachException: Exception;
    function FatalException: Exception;
    function IsCancelled: boolean;
    function IsDone: boolean;
    function TryValue(timeout_ms: cardinal; var value: T): boolean;
    function Value: T;
    function WaitFor(timeout_ms: cardinal): boolean;
  end;

The interface implements exception-handling functions, cancellation support and functions that check if the background calculation has completed.

3.6.2 Completion detection

When you call the Value function, you don’t know in advance what will happen. If the background code has already calculated the result, the Value call will return immediately. Otherwise, the caller thread will be blocked until the result is available. If you are executing a long calculation (or if the web or database connection did not succeed and is now waiting for a timeout to occur), this may last a while. If you call Value from the main thread, your whole application will be blocked until Value returns.

There are a few ways around this problem. One is to periodically call the IsDone function. It returns False while the background calculation is still working and True once the result is available. Another option is to call WaitFor with some (small) timeout. WaitFor waits the specified number of milliseconds and returns True if the result is available. The third way to achieve the same is to call TryValue periodically. TryValue also waits the specified number of milliseconds and returns True if the result is available. In addition, it returns the result in the value parameter.
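The first and third approaches can be sketched in a console program as follows; the 500 ms delay and the 50 ms polling interval are arbitrary. In a GUI application you would typically poll from a timer instead of a loop, so that the message loop keeps running.

```pascal
program FuturePolling;

{$APPTYPE CONSOLE}

uses
  System.SysUtils,
  OtlParallel;

var
  calc: TOmniFutureDelegate<integer>;
  future: IOmniFuture<integer>;
  res1, res2: integer;

begin
  calc :=
    function: integer
    begin
      Sleep(500); // simulate a slow calculation
      Result := 42;
    end;

  // Option 1: poll IsDone and do other work in between.
  future := Parallel.Future<integer>(calc);
  while not future.IsDone do begin
    Write('.');
    Sleep(50);
  end;
  res1 := future.Value; // does not block, the result is ready

  // Option 3: TryValue waits up to 50 ms and returns the result when ready.
  future := Parallel.Future<integer>(calc);
  while not future.TryValue(50, res2) do
    Write('*');
  future := nil;

  Writeln;
  Writeln(res1, ' ', res2);
end.
```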

The fourth and completely different way is to specify a termination handler which will notify you when the background calculation is completed. The following example sets the termination handler to get the value of the background calculation into the memo field and then destroy the Future interface.

procedure StartHttpGet(const url: string);
begin
  FGetFuture := Parallel.Future<string>(
    function: string
    begin
      Result := HttpGet(url);
    end,
    Parallel.TaskConfig.OnTerminated(
      procedure
      begin
        Memo1.Text := FGetFuture.Value;
        FGetFuture := nil;
      end
    )
  );
end;

3.6.3 Cancellation

It is possible to cancel the background execution of the Future before it is completed. The Future uses the cancellation token mechanism to achieve this. Cancellation is cooperative: if the background task does not willingly cancel itself, cancellation will fail.

To cancel a background task, the Future owner (the code that called Parallel.Future) has to call the Cancel method on the IOmniFuture<T> interface. This will signal the cancellation token which the background task must check periodically. To get access to the cancellation token, background code must be declared as a function accepting an IOmniTask parameter.

The following (pretty much pointless) program illustrates this concept.

var
  FCountFuture: IOmniFuture<integer>;

function CountTo100(const task: IOmniTask): integer;
var
  i: integer;
begin
  Result := 0;
  for i := 1 to 100 do begin
    Sleep(100);
    Result := i;
    if task.CancellationToken.IsSignalled then
      break; //for
  end;
end;

procedure StartCounting;
begin
  FCountFuture := Parallel.Future<integer>(CountTo100);
  Sleep(100);
  FCountFuture.Cancel;
  FCountFuture.WaitFor(INFINITE);
  FCountFuture := nil;
end;

StartCounting creates a Future which executes CountTo100 function in the background. It then sleeps 100 milliseconds, calls the Cancel function, waits for the Future to terminate and clears the Future interface.

CountTo100 function counts from 1 to 100. It sleeps for 100 milliseconds after each number, stores the current counter in the function result and then checks the cancellation token. If it is signaled (meaning that the owner called the Cancel function), it will break out of the for loop.

If you put a breakpoint on the last line of the StartCounting function and run the program, you’ll see that it will be reached almost immediately, proving that the CountTo100 did not take 10 seconds to return a result (100 repeats * 100 milliseconds = 10 seconds).

You cannot call the Value function if the calculation was cancelled, because doing so raises an EFutureCancelled exception. If you don’t know whether Cancel was called or not, call IOmniFuture<T>.IsCancelled and check the result (True = calculation was cancelled).
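A variant of StartCounting that checks IsCancelled before touching Value could look like the sketch below. It is written as a console program for illustration, and the 300 ms delay before Cancel is arbitrary.

```pascal
program FutureCancel;

{$APPTYPE CONSOLE}

uses
  Winapi.Windows,  // INFINITE
  System.SysUtils,
  OtlTask,
  OtlParallel;

function CountTo100(const task: IOmniTask): integer;
var
  i: integer;
begin
  Result := 0;
  for i := 1 to 100 do begin
    Sleep(100);
    Result := i;
    if task.CancellationToken.IsSignalled then
      break; // cooperative cancellation
  end;
end;

var
  future: IOmniFuture<integer>;
  wasCancelled: boolean;

begin
  future := Parallel.Future<integer>(CountTo100);
  Sleep(300);
  future.Cancel;
  future.WaitFor(INFINITE);

  // Only read Value if the calculation was not cancelled.
  wasCancelled := future.IsCancelled;
  if wasCancelled then
    Writeln('Cancelled; Value would raise EFutureCancelled')
  else
    Writeln('Result: ', future.Value);
  future := nil;
end.
```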

3.6.4 Handling exceptions

If the background code raises an unhandled exception (i.e. the exception was not captured in a try..except block), OmniThreadLibrary catches this exception and gracefully completes the background task. When you call the Value function, this exception is re-raised.

This helps immensely with debugging, because background exceptions (exceptions in background threads) are ignored when you run the program without a debugger. By default, Delphi does nothing with them: it behaves as if nothing is wrong, and that can be quite dangerous. Future exceptions are re-raised in the main thread when Value is called, which makes them equivalent to other exceptions in the main thread.

There are a few different ways to handle exceptions in a Future, and they are easiest to explain through code. The first example catches the exception by wrapping the Value call in try..except.

procedure FutureException1;
var
  future: IOmniFuture<integer>;
begin
  future := Parallel.Future<integer>(
    function: integer
    begin
      raise ETestException.Create('Exception in Parallel.Future');
    end
  );
  Log('Future is executing ...');
  Sleep(1000);
  try
    Log('Future returned: %d', [future.Value]);
  except
    on E: Exception do
      Log('Future raised exception %s:%s', [E.ClassName, E.Message]);
  end;
end;

The second example uses WaitFor to wait for task completion and then checks the result of the FatalException function. It returns nil if there was no exception, or the exception object if there was one. The exception object itself is still owned by the Future task and will be destroyed when the Future is destroyed.

procedure FutureException2;
var
  future: IOmniFuture<integer>;
begin
  future := Parallel.Future<integer>(
    function: integer
    begin
      raise ETestException.Create('Exception in Parallel.Future');
    end
  );
  Log('Future is executing ...');
  future.WaitFor(INFINITE);
  if assigned(future.FatalException) then
    Log('Future raised exception %s:%s',
      [future.FatalException.ClassName, future.FatalException.Message])
  else
    Log('Future returned: %d', [future.Value]);
end;

The third example shows how you can detach the exception from the future. By calling DetachException you take ownership of the exception object, and you should destroy it at some appropriate point in time.

procedure FutureException3;
var
  excFuture: Exception;
  future   : IOmniFuture<integer>;
begin
  future := Parallel.Future<integer>(
    function: integer
    begin
      raise ETestException.Create('Exception in Parallel.Future');
    end
  );
  Log('Future is executing ...');
  future.WaitFor(INFINITE);
  excFuture := future.DetachException;
  try
    if assigned(excFuture) then
      Log('Future raised exception %s:%s',
        [excFuture.ClassName, excFuture.Message])
    else
      Log('Future returned: %d', [future.Value]);
  finally FreeAndNil(excFuture); end;
end;

See also demo 48_OtlParallelExceptions.

3.6.5 Examples

Practical examples of Future usage can be found in chapter OmniThreadLibrary and COM/OLE.

3.7 Join

Join abstraction enables you to start multiple background tasks in multiple threads. To create the task, call Parallel.Join.

See also demo 37_ParallelJoin.

Example:

Parallel.Join(
  procedure
  var
    i: integer;
  begin
    for i := 1 to 8 do begin
      Sleep(200);
      MessageBeep($FFFFFFFF);
    end;
  end,
  procedure
  var
    i: integer;
  begin
    for i := 1 to 10 do begin
      Sleep(160);
      MessageBeep($FFFFFFFF);
    end;
  end
).Execute;

This simple program executes two background tasks, each beeping at a different frequency. Each task is coded as an anonymous method but you can also use a normal method or a normal procedure for the task code.
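As a sketch of the "normal procedure" variant, the same two beeping tasks can be written as plain global procedures and passed to the Join(const task1, task2: TProc) overload. The program name and the two counters are hypothetical additions that only record how many beeps each task produced; each counter is written by a single task, so no locking is needed.

```delphi
program JoinWithProcedures;

{$APPTYPE CONSOLE}

uses
  Winapi.Windows,
  System.SysUtils,
  OtlParallel;

var
  slowBeeps, fastBeeps: integer; // each counter is written by one task only

procedure BeepSlow;
var
  i: integer;
begin
  for i := 1 to 8 do begin
    Sleep(200);
    MessageBeep($FFFFFFFF);
    Inc(slowBeeps);
  end;
end;

procedure BeepFast;
var
  i: integer;
begin
  for i := 1 to 10 do begin
    Sleep(160);
    MessageBeep($FFFFFFFF);
    Inc(fastBeeps);
  end;
end;

begin
  // Parameterless procedures are compatible with TProc, so they can be
  // passed directly to the two-task Join overload.
  Parallel.Join(BeepSlow, BeepFast).Execute;
  // Execute waits for both tasks, so the counters are final here.
  Writeln(slowBeeps, ' slow beeps, ', fastBeeps, ' fast beeps'); // 8 slow beeps, 10 fast beeps
end.
```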

The Parallel class defines five Join overloads. The first creates an empty IOmniParallelJoin interface. The next two create the same interface configured with two tasks, and the last two create it configured with any number of tasks. Tasks can be of two different types: parameterless methods and methods with one parameter of the IOmniJoinState type.

type
  TOmniJoinDelegate = reference to procedure (const joinState:
    IOmniJoinState);

  Parallel = class
    class function Join: IOmniParallelJoin; overload;
    class function Join(const task1, task2: TProc): IOmniParallelJoin;
      overload;
    class function Join(const task1, task2: TOmniJoinDelegate):
      IOmniParallelJoin; overload;
    class function Join(const tasks: array of TProc): IOmniParallelJoin;
      overload;
    class function Join(const tasks: array of TOmniJoinDelegate):
      IOmniParallelJoin; overload;
    ...
  end;

3.7.1 IOmniParallelJoin interface

Parallel.Join returns an IOmniParallelJoin interface which you can use to specify tasks, start and control execution and handle exceptions.

type
  IOmniParallelJoin = interface
    function Cancel: IOmniParallelJoin;
    function DetachException: Exception;
    function Execute: IOmniParallelJoin;
    function FatalException: Exception;
    function IsCancelled: boolean;
    function IsExceptional: boolean;
    function NumTasks(numTasks: integer): IOmniParallelJoin;
    function OnStop(const stopCode: TProc): IOmniParallelJoin; overload;
    function OnStop(const stopCode: TOmniTaskStopDelegate): IOmniParallelJoin; overload;
    function OnStopInvoke(const stopCode: TProc): IOmniParallelJoin;
    function Task(const task: TProc): IOmniParallelJoin; overload;
    function Task(const task: TOmniJoinDelegate): IOmniParallelJoin; overload;
    function TaskConfig(const config: IOmniTaskConfig): IOmniParallelJoin;
    function NoWait: IOmniParallelJoin;
    function WaitFor(timeout_ms: cardinal): boolean;
  end;

The most important of these functions is Execute. It will start an appropriate number of background threads and start executing tasks in those threads. By default, Join uses as many threads as there are tasks but you can override this behaviour by calling the NumTasks function.

If NumTasks receives a positive parameter (> 0), the number of worker tasks is set to that number. For example, NumTasks(16) starts 16 worker tasks, even if that is more than the number of available cores.

If NumTasks receives a negative parameter (< 0), it specifies the number of cores that should be reserved for other use. The number of worker tasks is then set to <number of available cores> - <number of reserved cores>. If, for example, the current process can use 16 cores and NumTasks(-4) is used, only 12 (16-4) worker tasks will be started.

Value 0 is not allowed and results in an exception.
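The NumTasks rule above is plain arithmetic, so it can be restated as a small stand-alone function. EffectiveWorkerCount is a hypothetical helper written for illustration only; it is not part of OmniThreadLibrary. The text does not say what happens when more cores are reserved than are available, so the sketch deliberately does not handle that case.

```delphi
program NumTasksRule;

{$APPTYPE CONSOLE}

uses
  System.SysUtils;

// Hypothetical helper (not an OmniThreadLibrary function) that mirrors
// the NumTasks rule described in the text.
function EffectiveWorkerCount(numTasks, availableCores: integer): integer;
begin
  if numTasks > 0 then
    // Positive value: exactly that many workers, even if it exceeds the core count.
    Result := numTasks
  else if numTasks < 0 then
    // Negative value: reserve -numTasks cores for other use.
    Result := availableCores - (-numTasks)
  else
    // Zero is rejected, just as NumTasks(0) is.
    raise EArgumentException.Create('NumTasks(0) is not allowed');
end;

begin
  Writeln(EffectiveWorkerCount(16, 8));  // 16
  Writeln(EffectiveWorkerCount(-4, 16)); // 12
end.
```

In real code the number of available cores would come from OmniThreadLibrary itself, for example from Environment.Process.Affinity.Count, which appears in the Parallel task example later in this chapter.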

You can add tasks to a Join abstraction by calling the Task function. In fact, that is exactly how the Parallel.Join overloads are implemented.

class function Parallel.Join(const task1, task2: TProc): IOmniParallelJoin;
begin
  Result := TOmniParallelJoin.Create.Task(task1).Task(task2);
end;

class function Parallel.Join(const tasks: array of TProc): IOmniParallelJoin;
var
  aTask: TProc;
begin
  Result := TOmniParallelJoin.Create;
  for aTask in tasks do
    Result.Task(aTask);
end;
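The same Task function is available to your code, so a Join can also be assembled step by step, for example when the number of tasks is only known at run time. The following is a minimal sketch; MakeTask and the shared total are hypothetical names. Creating each anonymous method inside a separate function gives every task its own copy of taskNum instead of sharing the loop variable, and TInterlocked (System.SyncObjs) keeps the additions to the shared total thread-safe.

```delphi
program JoinBuiltInLoop;

{$APPTYPE CONSOLE}

uses
  System.SysUtils,
  System.SyncObjs,
  OtlParallel;

var
  total: integer;

// Hypothetical factory: each call captures its own taskNum parameter.
function MakeTask(taskNum: integer): TProc;
begin
  Result :=
    procedure
    begin
      Sleep(50 * taskNum);
      TInterlocked.Add(total, taskNum); // thread-safe update of the shared total
    end;
end;

var
  join: IOmniParallelJoin;
  i   : integer;
begin
  total := 0;
  join := Parallel.Join;        // empty Join, no tasks yet
  for i := 1 to 4 do
    join.Task(MakeTask(i));     // Task adds one task and returns the same interface
  join.Execute;                 // without NoWait, Execute waits for all tasks
  Writeln('Total: ', total);    // Total: 10
end.
```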

To set up a task configuration block, call the TaskConfig function. The following example uses TaskConfig to set up a critical section, which is then used in two parallel tasks to protect the shared resource. Workers use the IOmniJoinState instance to access the IOmniTask interface and, through it, the Lock property.

FSharedValue := 42;
Parallel.Join(
  procedure (const joinState: IOmniJoinState)
  var
    i: integer;
  begin
    for i := 1 to 1000000 do begin
      joinState.Task.Lock.Acquire;
      FSharedValue := FSharedValue + 17;
      joinState.Task.Lock.Release;
    end;
  end,
  procedure (const joinState: IOmniJoinState)
  var
    i: integer;
  begin
    for i := 1 to 1000000 do begin
      joinState.Task.Lock.Acquire;
      FSharedValue := FSharedValue - 17;
      joinState.Task.Lock.Release;
    end;
  end
).TaskConfig(Parallel.TaskConfig.WithLock(CreateOmniCriticalSection))
 .Execute;

By default, Join waits for all background tasks to complete execution. Alternatively, you can call the NoWait function, after which Join just starts the tasks and returns immediately. If you want to be notified when all tasks have finished, you can assign a termination handler by calling the OnStop function. This termination handler is called from one of the worker threads, not from the main thread! If you need to run code in the main thread, use the task configuration block.

Release [3.07.2] introduced method OnStopInvoke which works like OnStop except that the termination handler is automatically executed in the context of the owner thread via implicit Invoke. For example, see Parallel.ForEach.OnStopInvoke.

You can also call WaitFor to wait for the Join to finish. WaitFor accepts an optional timeout parameter; by default it will wait as long as needed.
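A minimal console sketch of the asynchronous mode, combining NoWait, OnStop and WaitFor. The program name and task bodies are illustrative. The interface is kept in a variable because, as explained at the start of this chapter, an abstraction only lives as long as the interface returned from the Parallel factory. The order in which the OnStop message and the main thread's last message appear is not guaranteed.

```delphi
program JoinNoWait;

{$APPTYPE CONSOLE}

uses
  Winapi.Windows,
  System.SysUtils,
  OtlParallel;

var
  join: IOmniParallelJoin;
begin
  join := Parallel.Join(
      procedure begin Sleep(300); end,
      procedure begin Sleep(500); end)
    .OnStop(
      procedure
      begin
        // Runs in one of the worker threads, not in the main thread.
        Writeln('OnStop: all tasks have finished');
      end)
    .NoWait
    .Execute; // returns immediately because of NoWait

  // A short timeout: the tasks need at least 500 ms, so this returns False.
  if not join.WaitFor(100) then
    Writeln('Still running after 100 ms, main thread is free');

  join.WaitFor(INFINITE); // block until both tasks are done
  Writeln('WaitFor(INFINITE) returned');
end.
```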

3.7.2 IOmniJoinState interface

A task method can accept a parameter of type IOmniJoinState. This allows it to access the IOmniTask interface, participate in cooperative cancellation and check for exceptions.

type
  IOmniJoinState = interface
    function GetTask: IOmniTask;
    //
    procedure Cancel;
    function IsCancelled: boolean;
    function IsExceptional: boolean;
    property Task: IOmniTask read GetTask;
  end;

3.7.3 Cancellation

Join background tasks support cooperative cancellation. If you are using TOmniJoinDelegate tasks (that is, tasks accepting the IOmniJoinState parameter), any task can call the Cancel method of this interface. This, in turn, sets an internal cancellation flag, which can be queried by calling the IsCancelled method. That way, one task can interrupt other tasks, provided that they test IsCancelled repeatedly.

The main thread can also cancel its subtasks (when using NoWait) by calling IOmniParallelJoin.Cancel, and it can test the cancellation flag by calling IsCancelled.

The following demo code shows most of the concepts mentioned above.

var
  join: IOmniParallelJoin;
  time: int64;
begin
  FJoinCount.Value := 0;
  FJoinCount2.Value := 0;
  join := Parallel.Join(
    procedure (const joinState: IOmniJoinState)
    var
      i: integer;
    begin
      for i := 1 to 10 do begin
        Sleep(100);
        FJoinCount.Increment;
        if joinState.IsCancelled then
          break; //for
      end;
    end,
    procedure (const joinState: IOmniJoinState)
    var
      i: integer;
    begin
      for i := 1 to 10 do begin
        Sleep(200);
        FJoinCount2.Increment;
        if joinState.IsCancelled then
          break; //for
      end;
    end
  ).NoWait.Execute;
  Sleep(500);
  time := DSiTimeGetTime64;
  join.Cancel.WaitFor(INFINITE);
  Log(Format('Waited %d ms for joins to terminate',
    [DSiElapsedTime64(time)]));
  Log(Format('Tasks counted up to %d and %d',
    [FJoinCount.Value, FJoinCount2.Value]));
end;

The call to Parallel.Join starts two tasks. Because NoWait is used, the call returns immediately and stores the resulting IOmniParallelJoin interface in the local variable join. The main code then sleeps for half a second, cancels the execution and waits for the background tasks to terminate.

Both tasks execute a simple loop which waits a little, increments a counter and checks the cancellation flag. Because the cancellation flag is set after 500 ms, we would expect five or six repetitions of the first loop (five repetitions take exactly 500 ms, and we can’t tell which will execute first: Cancel or the fifth IsCancelled) and three repetitions of the second loop (its third check, at about 600 ms, is the first one that sees the flag). That is exactly what the program prints out.

3.7.4 Handling exceptions

Exceptions in background tasks are caught and re-raised in the WaitFor method. If you are using a synchronous version of Join (without the NoWait modifier), then WaitFor is called at the end of the Execute method (in other words, Parallel.Join(…).Execute will re-raise task exceptions). If, however, you are using the asynchronous version (by calling Parallel.Join(…).NoWait.Execute), exception will only be raised when you wait for the background tasks to complete by calling WaitFor.

You can test for the exception by calling the FatalException function. It will first wait for all background tasks to complete (without raising the exception) and then return the exception object. You can also detach the exception object from the Join and handle it yourself by using the DetachException function.

There’s also an IsExceptional function (available in IOmniParallelJoin and IOmniJoinState interfaces) which tells you whether any background task has thrown an exception.

As Join executes multiple tasks, there can be multiple background exceptions. To give you full access to those exceptions, Join wraps them into an EJoinException object.

type
  EJoinException = class(Exception)
    constructor Create; reintroduce;
    destructor Destroy; override;
    procedure Add(iTask: integer; taskException: Exception);
    function Count: integer;
    property Inner[idxException: integer]: TJoinInnerException
      read GetInner; default;
  end;

This exception class contains combined error messages from all background tasks in its Message property and allows you to access exception information for all caught exceptions directly with the Inner[] property. The following code demonstrates this.

var
  iInnerExc: integer;
begin
  try
    Parallel.Join([
      procedure begin
        raise ETestException.Create('Exception 1 in Parallel.Join');
      end,
      procedure begin
      end,
      procedure begin
        raise ETestException.Create('Exception 2 in Parallel.Join');
      end]).Execute;
  except
    on E: EJoinException do begin
      Log('Join raised exception %s:%s', [E.ClassName, E.Message]);
      for iInnerExc := 0 to E.Count - 1 do
        Log('  Task #%d raised exception: %s:%s', [E[iInnerExc].TaskNumber,
          E[iInnerExc].FatalException.ClassName,
          E[iInnerExc].FatalException.Message]);
    end;
  end;
end;

The iInnerExc variable loops over all caught exceptions and for each such exception displays the task number (starting with 0), exception class and exception message. This approach allows you to log the exception or, if you are interested in details, examine specific inner exceptions and handle them appropriately.
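With the asynchronous version, exceptions can also be inspected without try..except, using FatalException and DetachException as described above. The following sketch assumes that the object returned from FatalException is the EJoinException wrapper (it is checked with `is` rather than cast blindly) and that EJoinException is declared in OtlParallel. The program name and the reported flag are illustrative.

```delphi
program JoinDetachException;

{$APPTYPE CONSOLE}

uses
  System.SysUtils,
  OtlParallel;

var
  join    : IOmniParallelJoin;
  exc     : Exception;
  i       : integer;
  reported: boolean;
begin
  reported := false;
  join := Parallel.Join(
      procedure begin raise Exception.Create('Task failed'); end,
      procedure begin Sleep(100); end)
    .NoWait
    .Execute;

  // FatalException waits for all tasks to complete and does not re-raise.
  if assigned(join.FatalException) then begin
    // DetachException transfers ownership of the exception object to us.
    exc := join.DetachException;
    try
      reported := true;
      Writeln(exc.ClassName, ': ', exc.Message);
      if exc is EJoinException then
        for i := 0 to EJoinException(exc).Count - 1 do
          Writeln('  task #', EJoinException(exc)[i].TaskNumber, ': ',
            EJoinException(exc)[i].FatalException.Message);
    finally
      FreeAndNil(exc); // we own it now, so we must free it
    end;
  end;
end.
```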

See also demo 48_OtlParallelExceptions.

3.8 Parallel task

Parallel task abstraction enables you to start the same method in multiple threads. To create the task, call Parallel.ParallelTask.

Example:

Parallel.ParallelTask.NumTasks(3).Execute(
  procedure
  begin
    while true do
      ;
  end
);

This simple code fragment starts an infinite loop in three threads. The task is coded as an anonymous method, but you can also use a normal method or a normal procedure for the task code.

The Parallel class implements function ParallelTask which returns an IOmniParallelTask interface. All configuration of the parallel task is done via this interface.

type
  Parallel = class
    class function ParallelTask: IOmniParallelTask;
    ...
  end;

3.8.1 IOmniParallelTask interface

type
  IOmniParallelTask = interface
    function Execute(const aTask: TProc): IOmniParallelTask; overload;
    function Execute(
      const aTask: TOmniParallelTaskDelegate): IOmniParallelTask; overload;
    function NoWait: IOmniParallelTask;
    function NumTasks(numTasks: integer): IOmniParallelTask;
    function OnStop(const stopCode: TProc): IOmniParallelTask; overload;
    function OnStop(const stopCode: TOmniTaskStopDelegate): IOmniParallelTask; overload;
    function OnStopInvoke(const stopCode: TProc): IOmniParallelTask;
    function TaskConfig(const config: IOmniTaskConfig): IOmniParallelTask;
    function WaitFor(timeout_ms: cardinal): boolean;
  end;

The most important of these functions is Execute. It will start an appropriate number of background threads and start executing the task in those threads.

There are two overloaded versions of Execute. The first accepts a parameter-less background task, and the second accepts a background task with an IOmniTask parameter.

By default, Parallel task uses as many threads as there are tasks but you can override this behaviour by calling the NumTasks function.

If NumTasks receives a positive parameter (> 0), the number of worker tasks is set to that number. For example, NumTasks(16) starts 16 worker tasks, even if that is more than the number of available cores.

If NumTasks receives a negative parameter (< 0), it specifies the number of cores that should be reserved for other use. The number of worker tasks is then set to <number of available cores> - <number of reserved cores>. If, for example, current process can use 16 cores and NumTasks(-4) is used, only 12 (16-4) worker tasks will be started.

Parameter 0 is not allowed and results in an exception.

The OnStop function can be used to set up a termination handler: code that is executed when all background tasks have completed execution. This termination handler is called from one of the worker threads, not from the main thread! If you need to run code in the main thread, use the task configuration block. To set up a task configuration block, call the TaskConfig function.

Release [3.07.2] introduced method OnStopInvoke which works like OnStop except that the termination handler is automatically executed in the context of the owner thread via implicit Invoke. For example, see Parallel.ForEach.OnStopInvoke.

A call to the WaitFor function will wait for up to timeout_ms milliseconds (this value can be set to INFINITE) for all background tasks to terminate. If the tasks terminate in the specified time, WaitFor will return True. Otherwise, it will return False.
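The second Execute overload passes an IOmniTask to the worker, which gives it access to per-abstraction resources such as the lock configured with TaskConfig (the same technique the Join example uses). In this sketch the delegate signature `procedure (const task: IOmniTask)` is assumed from the description of TOmniParallelTaskDelegate above, and the unit names OtlSync (CreateOmniCriticalSection) and OtlTask (IOmniTask) are assumptions about where those symbols live. Four workers each increment a shared counter 1,000 times under the lock.

```delphi
program ParallelTaskWithLock;

{$APPTYPE CONSOLE}

uses
  System.SysUtils,
  OtlSync,
  OtlTask,
  OtlParallel;

var
  counter: integer;
begin
  counter := 0;
  Parallel.ParallelTask
    .NumTasks(4)
    // All workers share the critical section from the task configuration block.
    .TaskConfig(Parallel.TaskConfig.WithLock(CreateOmniCriticalSection))
    .Execute(
      procedure (const task: IOmniTask)
      var
        i: integer;
      begin
        for i := 1 to 1000 do begin
          task.Lock.Acquire;
          try
            Inc(counter); // protected by the shared lock
          finally
            task.Lock.Release;
          end;
        end;
      end);
  // No NoWait, so Execute returned only after all four workers finished.
  Writeln(counter); // 4000
end.
```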

3.8.2 Example

The following code uses ParallelTask to generate large quantities of pseudo-random data. The data is written to an output stream.

procedure CreateRandomFile(fileSize: integer; output: TStream);
const
  CBlockSize = 1 * 1024 * 1024 {1 MB};
var
  buffer   : TOmniValue;
  memStr   : TMemoryStream;
  outQueue : IOmniBlockingCollection;
  unwritten: IOmniCounter;
begin
  outQueue := TOmniBlockingCollection.Create;
  unwritten := CreateCounter(fileSize);
  Parallel.ParallelTask.NoWait
    .NumTasks(Environment.Process.Affinity.Count)
    .OnStop(Parallel.CompleteQueue(outQueue))
    .Execute(
      procedure
      var
        buffer      : TMemoryStream;
        bytesToWrite: integer;
        randomGen   : TGpRandom;
      begin
        randomGen := TGpRandom.Create;
        try
          while unwritten.Take(CBlockSize, bytesToWrite) do begin
            buffer := TMemoryStream.Create;
            buffer.Size := bytesToWrite;
            FillBuffer(buffer.Memory, bytesToWrite, randomGen);
            outQueue.Add(buffer);
          end;
        finally FreeAndNil(randomGen); end;
      end
    );
  for buffer in outQueue do begin
    memStr := buffer.AsObject as TMemoryStream;
    output.CopyFrom(memStr, 0);
    FreeAndNil(memStr);
  end;
end;

The code creates a blocking collection to hold buffers with pseudo-random data. Then it creates a counter which will hold the count of bytes that have yet to be written.

ParallelTask is used to start parallel workers. Each worker initializes its own pseudo-random data generator and then keeps generating buffers with pseudo-random data until the counter drops to zero. Each buffer is written to the blocking collection.

Because of the NoWait modifier, the main thread continues execution immediately after all threads have been scheduled. The main thread keeps reading buffers from the blocking collection and writes the content of those buffers into the output stream. (As TStream is not thread-safe, we cannot write to the output stream directly from multiple background threads.)

The for..in loop blocks if there is no data in the blocking collection, but it only stops looping after the blocking collection’s CompleteAdding method is called. This is done with the help of the Parallel.CompleteQueue helper, which is called from the termination handler (OnStop).

class function Parallel.CompleteQueue(
  const queue: IOmniBlockingCollection): TProc;
begin
  Result :=
    procedure
    begin
      queue.CompleteAdding;
    end;
end;
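The producer/consumer pattern above can be reduced to its essentials: several ParallelTask workers add values to a blocking collection, OnStop closes the collection with Parallel.CompleteQueue, and the main thread consumes everything with for..in. This is a minimal sketch with illustrative values; the unit names follow the uses clause of the Pipeline example later in this chapter. Four workers each add the numbers 1 to 100, so the expected total is 4 × 5050.

```delphi
program ParallelTaskQueue;

{$APPTYPE CONSOLE}

uses
  System.SysUtils,
  OtlCommon,
  OtlCollections,
  OtlParallel;

var
  queue: IOmniBlockingCollection;
  value: TOmniValue;
  total: integer;
begin
  queue := TOmniBlockingCollection.Create;
  Parallel.ParallelTask.NoWait
    .NumTasks(4)
    // When all workers are done, CompleteAdding ends the for..in loop below.
    .OnStop(Parallel.CompleteQueue(queue))
    .Execute(
      procedure
      var
        i: integer;
      begin
        for i := 1 to 100 do
          queue.Add(i); // blocking collections are safe to share between threads
      end);

  total := 0;
  for value in queue do // blocks while empty, exits after CompleteAdding
    Inc(total, value.AsInteger);
  Writeln(total); // 20200
end.
```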

3.8.3 Handling exceptions

Exceptions in background tasks are caught and re-raised in the WaitFor method. If you are using the synchronous version of Parallel task (without the NoWait modifier), then WaitFor is called at the end of the Execute method (in other words, Parallel.ParallelTask.Execute(...) will re-raise task exceptions). If, however, you are using the asynchronous version (by calling Parallel.ParallelTask.NoWait.Execute(...)), an exception will only be raised when you wait for the background tasks to complete by calling WaitFor.

For more details on handling ParallelTask exceptions, see the Handling exceptions section in the Join chapter.

3.8.4 Examples

Practical examples of Parallel Task usage can be found in chapters Background worker and list partitioning and Parallel data production.

3.9 Background worker

Background worker abstraction implements a client/server relationship. To create a Background worker abstraction, call Parallel.BackgroundWorker.

See also demo 52_BackgroundWorker.

Example:

Start the worker

FBackgroundWorker := Parallel.BackgroundWorker.NumTasks(2)
  .Execute(
    procedure (const workItem: IOmniWorkItem)
    begin
      workItem.Result := workItem.Data.AsInteger * 3;
    end
  )
  .OnRequestDone(
    procedure (const Sender: IOmniBackgroundWorker;
      const workItem: IOmniWorkItem)
    begin
      lbLogBW.ItemIndex := lbLogBW.Items.Add(Format('%d * 3 = %d',
        [workItem.Data.AsInteger, workItem.Result.AsInteger]));
    end
  );

Schedule a work item

FBackgroundWorker.Schedule(
  FBackgroundWorker.CreateWorkItem(Random(100)));

Stop the worker

FBackgroundWorker.Terminate(INFINITE);
FBackgroundWorker := nil;

3.9.1 Basics

Background worker is designed around the concept of a work item. You create a worker which spawns one or more background threads and then schedule work items to it. When they are processed, the abstraction notifies you so you can process the result. Work items are queued so you can schedule many work items at once and background threads will then process them one by one.

Background worker is created by calling Parallel.BackgroundWorker factory. Usually you’ll also set the main work item processing method and completion method by calling Execute and OnRequestDone, respectively. As usual, you can provide OTL with a method, a procedure, or an anonymous method.

FWorker := Parallel.BackgroundWorker
  .OnRequestDone(StringRequestDone)
  .Execute(StringProcessorHL);

To close the background worker, call the Terminate method and set the reference (FWorker) to nil.

To create a work item, call the CreateWorkItem factory and pass the result to the Schedule method. You can pass any data to the work item by passing a parameter to the CreateWorkItem method. If you need to pass multiple parameters, you can collect them in a record and wrap it with a TOmniValue.FromRecord<T>, pass them as an array of TOmniValues or pass them as an object or an interface.
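A sketch of the record approach: two operands are packed into a record, wrapped with TOmniValue.FromRecord<T> and unpacked in the executor. Several things here are assumptions. TMulRequest is a hypothetical record type. workItem.Data.ToRecord<T> is assumed to be the counterpart of FromRecord. The completion is handled with OnRequestDone_Asy, because OnRequestDone runs in the owner thread, which presumably must process messages for that to happen, and this plain console program has no message loop. A TEvent signals the main thread when the result is ready.

```delphi
program BackgroundWorkerRecord;

{$APPTYPE CONSOLE}

uses
  Winapi.Windows,
  System.SysUtils,
  System.SyncObjs,
  OtlCommon,
  OtlParallel;

type
  // Hypothetical request: two operands packed into one work item.
  TMulRequest = record
    A, B: integer;
  end;

var
  worker : IOmniBackgroundWorker;
  done   : TEvent;
  request: TMulRequest;
  product: integer;
begin
  done := TEvent.Create(nil, true, false, '');
  try
    worker := Parallel.BackgroundWorker
      // The _Asy handler runs in the worker thread, so no message loop is needed.
      .OnRequestDone_Asy(
        procedure (const Sender: IOmniBackgroundWorker;
          const workItem: IOmniWorkItem)
        begin
          product := workItem.Result.AsInteger;
          done.SetEvent;
        end)
      .Execute(
        procedure (const workItem: IOmniWorkItem)
        var
          req: TMulRequest;
        begin
          // Assumption: ToRecord<T> unwraps what FromRecord<T> stored.
          req := workItem.Data.ToRecord<TMulRequest>;
          workItem.Result := req.A * req.B;
        end);

    request.A := 6;
    request.B := 7;
    worker.Schedule(
      worker.CreateWorkItem(TOmniValue.FromRecord<TMulRequest>(request)));

    done.WaitFor(INFINITE); // wait until the completion handler has run
    Writeln('6 * 7 = ', product);
    worker.Terminate(INFINITE);
    worker := nil;
  finally
    FreeAndNil(done);
  end;
end.
```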

3.9.2 IOmniBackgroundWorker interface

type
  TOmniTaskInitializerDelegate =
    reference to procedure(var taskState: TOmniValue);
  TOmniTaskFinalizerDelegate =
    reference to procedure(const taskState: TOmniValue);

  IOmniBackgroundWorker = interface
    function CreateWorkItem(const data: TOmniValue): IOmniWorkItem;
    procedure CancelAll; overload;
    procedure CancelAll(upToUniqueID: int64); overload;
    function Config: IOmniWorkItemConfig;
    function Execute(const aTask: TOmniBackgroundWorkerDelegate = nil):
      IOmniBackgroundWorker;
    function Finalize(taskFinalizer:
      TOmniTaskFinalizerDelegate): IOmniBackgroundWorker;
    function Initialize(taskInitializer:
      TOmniTaskInitializerDelegate): IOmniBackgroundWorker;
    function NumTasks(numTasks: integer): IOmniBackgroundWorker;
    function OnRequestDone(const aTask: TOmniWorkItemDoneDelegate):
      IOmniBackgroundWorker;
    function OnRequestDone_Asy(const aTask: TOmniWorkItemDoneDelegate):
      IOmniBackgroundWorker;
    function OnStop(stopCode: TProc): IOmniBackgroundWorker; overload;
    function OnStop(stopCode: TOmniTaskStopDelegate): IOmniBackgroundWorker; overload;
    function OnStopInvoke(stopCode: TProc): IOmniBackgroundWorker;
    procedure Schedule(const workItem: IOmniWorkItem;
      const workItemConfig: IOmniWorkItemConfig = nil);
    function TaskConfig(const config: IOmniTaskConfig):
      IOmniBackgroundWorker;
    function Terminate(maxWait_ms: cardinal): boolean;
    function WaitFor(maxWait_ms: cardinal): boolean;
  end;

Background worker supports two notification mechanisms. By calling OnRequestDone, you set a synchronous handler which will be executed in the context of the thread that created the background worker (usually the main thread). In other words, if you call OnRequestDone, you don’t have to worry about thread synchronisation issues. The OnRequestDone_Asy handler, on the other hand, is executed asynchronously, in the context of the thread that processed the work item.

For performance reasons (for example when terminating the application), the code can prevent execution of event handlers for a work item by setting IOmniWorkItem.SkipCompletionHandler to True.

By calling NumTasks, you can set the degree of parallelism. By default, background worker uses only one background task but you can override this behaviour.

If NumTasks receives a positive parameter (> 0), the number of worker tasks is set to that number. For example, NumTasks(16) starts 16 worker tasks, even if that is more than the number of available cores.

If NumTasks receives a negative parameter (< 0), it specifies a number of cores that should be reserved for other use. The number of worker tasks is then set to <number of available cores> - <number of reserved cores>. If, for example, current process can use 16 cores and NumTasks(-4) is used, only 12 (16-4) worker tasks will be started.

Value 0 is not allowed and results in an exception.

The OnStop function can be used to set up a termination handler: code that is executed when all background tasks have completed execution. This termination handler is called from one of the worker threads, not from the main thread! If you need to run code in the main thread, use the task configuration block. To set up a task configuration block, call the TaskConfig function.

Release [3.07.2] introduced method OnStopInvoke which works like OnStop except that the termination handler is automatically executed in the context of the owner thread via implicit Invoke. For example, see Parallel.ForEach.OnStopInvoke.

Calling Terminate stops the background workers. If they stop within maxWait_ms milliseconds, Terminate returns True; otherwise it returns False. WaitFor waits for the workers to stop without commanding them to stop beforehand (so you would have to call Terminate before WaitFor) and returns True or False just as Terminate does.

3.9.3 Task initialization

Background worker implements a mechanism that can be used by worker tasks to initialize and destroy task-specific structures.

By calling Initialize, you can provide a task initializer which is executed once for each worker task before it begins processing work items. Similarly, by calling Finalize you provide the background worker with a task finalizer which is called just before the background task is destroyed.

Both initializer and finalizer will receive a taskState variable where you can store any task-specific data (for example, a class containing multiple task-specific structures). This task state is also available in the executor method through the property IOmniWorkItem.TaskState.
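A minimal sketch of per-task state. TTaskContext is a hypothetical class: the initializer creates one instance per worker task and stores it in taskState, the executor reads it back through workItem.TaskState, and the finalizer frees it. Because each worker task has its own context object, the executor can update it without locking. As in the previous sketch, completion is tracked with OnRequestDone_Asy and a TEvent so that the example works as a console program without a message loop (an assumption about how OnRequestDone is dispatched).

```delphi
program BackgroundWorkerTaskState;

{$APPTYPE CONSOLE}

uses
  Winapi.Windows,
  System.SysUtils,
  System.SyncObjs,
  OtlCommon,
  OtlParallel;

type
  // Hypothetical per-task context; each worker task owns one instance.
  TTaskContext = class
    Processed: integer;
  end;

const
  CNumItems = 10;

var
  worker   : IOmniBackgroundWorker;
  allDone  : TEvent;
  completed: integer;
  i        : integer;
begin
  allDone := TEvent.Create(nil, true, false, '');
  try
    worker := Parallel.BackgroundWorker
      .NumTasks(2)
      .Initialize(
        procedure (var taskState: TOmniValue)
        begin
          taskState := TTaskContext.Create; // once per worker task
        end)
      .Finalize(
        procedure (const taskState: TOmniValue)
        begin
          Writeln('Task processed ',
            TTaskContext(taskState.AsObject).Processed, ' items');
          taskState.AsObject.Free; // once per worker task
        end)
      .OnRequestDone_Asy(
        procedure (const Sender: IOmniBackgroundWorker;
          const workItem: IOmniWorkItem)
        begin
          if TInterlocked.Increment(completed) = CNumItems then
            allDone.SetEvent;
        end)
      .Execute(
        procedure (const workItem: IOmniWorkItem)
        begin
          // Only this worker task touches its own context, so no lock is needed.
          Inc(TTaskContext(workItem.TaskState.AsObject).Processed);
          workItem.Result := workItem.Data.AsInteger * 2;
        end);

    for i := 1 to CNumItems do
      worker.Schedule(worker.CreateWorkItem(i));
    allDone.WaitFor(INFINITE);
    worker.Terminate(INFINITE); // finalizers run as the worker tasks shut down
    worker := nil;
  finally
    FreeAndNil(allDone);
  end;
end.
```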

3.9.4 Work item configuration

You can pass additional configuration parameters to the Schedule method by providing a configuration block, which can be created by calling the Config method. By using this approach, you can set a custom executor method or a custom completion method for each separate work item.

type
  IOmniWorkItemConfig = interface
    function OnExecute(const aTask: TOmniBackgroundWorkerDelegate):
      IOmniWorkItemConfig;
    function OnRequestDone(const aTask: TOmniWorkItemDoneDelegate):
      IOmniWorkItemConfig;
    function OnRequestDone_Asy(const aTask: TOmniWorkItemDoneDelegate):
      IOmniWorkItemConfig;
  end;

3.9.5 Work item interface

CreateWorkItem method returns an IOmniWorkItem interface.

type
  IOmniWorkItem = interface
    function DetachException: Exception;
    function FatalException: Exception;
    function IsExceptional: boolean;
    property CancellationToken: IOmniCancellationToken
      read GetCancellationToken;
    property Data: TOmniValue read GetData;
    property Result: TOmniValue read GetResult write SetResult;
    property SkipCompletionHandler: boolean read GetSkipCompletionHandler
      write SetSkipCompletionHandler;
    property Task: IOmniTask read GetTask;
    property TaskState: TOmniValue read GetTaskState;
    property UniqueID: int64 read GetUniqueID;
  end;

It contains input data (Data property), result (Result property) and a unique ID, which is assigned in the CreateWorkItem call. First work item gets ID 1, second ID 2 and so on. This allows for some flexibility when you want to cancel work items. You can cancel one specific item by calling workItem.CancellationToken.Signal or multiple items by calling backgroundWorker.CancelAll(highestIDToBeCancelled) or all items by calling backgroundWorker.CancelAll.

Cancellation is partly automatic and partly cooperative. If the work item that is to be cancelled has not yet started executing, the system prevents it from ever being executed. If, however, the work item is already being processed, your code must occasionally check workItem.CancellationToken.IsSignalled and exit when it returns True (provided that you want to support cancellation at all). Regardless of how the work item was cancelled, the completion handler is still called, and it can check workItem.CancellationToken.IsSignalled to find out whether the work item was cancelled prematurely.

Any uncaught exception will be stored in the FatalException property. You can detach (and take ownership of) the exception by calling the DetachException and you can test if there was an exception by calling IsExceptional. If IsExceptional returns True, any access to the Result property will raise exception stored in the FatalException property. [In other words – if an unhandled exception occurs in the executor code (in the background thread), it will propagate to the place where you access workItem.Result.]

Property Task provides access to the task executing the work item. Property TaskState returns the value initialized in the task initializer.

If SkipCompletionHandler is set to True when the work item is created or during its execution, the completion handlers for that work item won’t be called.

If SkipCompletionHandler is set to True in the OnRequestDone_Asy handler, then OnRequestDone handler won’t be called.
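The cancellation rules described in this section can be exercised with a small console sketch. The executor checks workItem.CancellationToken.IsSignalled between short sleeps (the cooperative part), CancelAll cancels everything scheduled so far, and the completion handler, which per the text is still called for cancelled items, counts how many were cancelled. The timings and names are illustrative, and the exact number of cancelled items depends on scheduling, so none is promised. OnRequestDone_Asy is used for the same console-program reason as in the earlier sketches.

```delphi
program BackgroundWorkerCancel;

{$APPTYPE CONSOLE}

uses
  Winapi.Windows,
  System.SysUtils,
  System.SyncObjs,
  OtlCommon,
  OtlParallel;

const
  CNumItems = 5;

var
  worker   : IOmniBackgroundWorker;
  allDone  : TEvent;
  completed: integer;
  cancelled: integer;
  i        : integer;
begin
  allDone := TEvent.Create(nil, true, false, '');
  try
    worker := Parallel.BackgroundWorker // one worker task by default
      .OnRequestDone_Asy(
        procedure (const Sender: IOmniBackgroundWorker;
          const workItem: IOmniWorkItem)
        begin
          // Called for every work item, whether it was cancelled or not.
          if workItem.CancellationToken.IsSignalled then
            TInterlocked.Increment(cancelled);
          if TInterlocked.Increment(completed) = CNumItems then
            allDone.SetEvent;
        end)
      .Execute(
        procedure (const workItem: IOmniWorkItem)
        var
          step: integer;
        begin
          for step := 1 to 20 do begin
            // Cooperative part: leave early once the token is signalled.
            if workItem.CancellationToken.IsSignalled then
              Exit;
            Sleep(10);
          end;
          workItem.Result := workItem.Data;
        end);

    for i := 1 to CNumItems do
      worker.Schedule(worker.CreateWorkItem(i));
    Sleep(300);       // items take roughly 200 ms each, so one is probably running
    worker.CancelAll; // queued items never start; the running one sees the token
    allDone.WaitFor(INFINITE);
    Writeln(cancelled, ' of ', CNumItems, ' work items were cancelled');
    worker.Terminate(INFINITE);
    worker := nil;
  finally
    FreeAndNil(allDone);
  end;
end.
```

To cancel a single item instead, keep the IOmniWorkItem returned from CreateWorkItem and call its CancellationToken.Signal; CancelAll(upToUniqueID) limits cancellation to items with IDs up to the given value.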

3.9.6 Examples

Practical examples of Background Worker usage can be found in chapters Background worker and list partitioning and OmniThreadLibrary and databases.

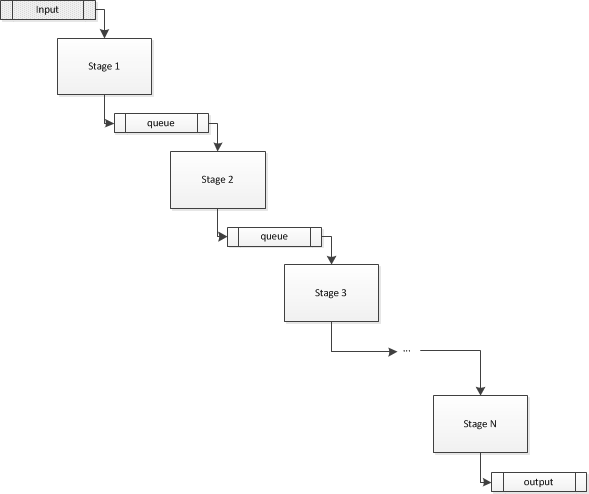

3.10 Pipeline

Pipeline abstraction encapsulates multistage processes in which the data processing can be split into multiple independent stages connected with data queues.

See also demo 41_Pipeline.

Example:

uses
  OtlCommon,
  OtlCollections,
  OtlParallel;

var
  sum: int64;

begin
  sum := Parallel.Pipeline
    .Stage(
      procedure (const input, output: IOmniBlockingCollection)
      var
        i: integer;
      begin
        for i := 1 to 1000000 do
          output.Add(i);
      end)
    .Stage(
      procedure (const input: TOmniValue; var output: TOmniValue)
      begin
        output := input.AsInteger * 3;
      end)
    .Stage(
      procedure (const input, output: IOmniBlockingCollection)
      var
        sum  : int64; // the total (1,500,001,500,000) overflows a 32-bit integer
        value: TOmniValue;
      begin
        sum := 0;
        for value in input do
          Inc(sum, value.AsInt64);
        output.Add(sum);
      end)
    .Run.Output.Next.AsInt64;
end.

This example creates a three-stage pipeline. The first stage generates numbers from 1 to 1,000,000. The second stage multiplies each number by 3. The third stage adds all numbers together and writes the result to the output queue. This result is then stored in the variable sum.

3.10.1 Background

The Pipeline abstraction is appropriate for parallelizing processes that can be split into stages (subprocesses) connected with data queues. Data flows from the input queue into the first stage, where it is partially processed and then emitted into an intermediary queue. The first stage then continues execution, processes more input data and outputs more output data. This continues until the complete input has been processed. The intermediary queue leads into the next stage, which does the processing in a similar manner, and so on. At the end, the data is output into a queue which can then be read and processed by the program that created this multistage process. As a whole, a multistage process acts as a pipeline: data comes in, data comes out (and a miracle occurs in-between ;)).

What is important here is that no stage shares state with any other stage. The only interaction between stages is done with the data passed through the intermediary queues. The quantity of data, however, doesn’t have to be constant. It is entirely possible for a stage to generate more or less data than it received on the input queue.

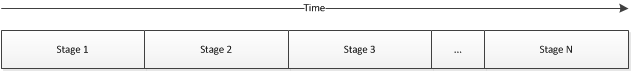

In a classical single-threaded program the execution plan for a multistage process is very simple.

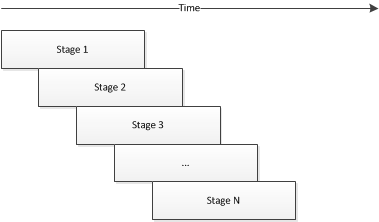

In a multi-threaded environment, however, we can do better than that. Because the stages are mostly independent, they can be executed in parallel.

3.10.2 Basics

A Pipeline is created by calling Parallel.Pipeline function which returns an IOmniPipeline interface. There are three overloaded versions of this function. The first creates an unconfigured pipeline. The second prepares one or more stages and optionally sets the input queue. The third prepares one or more stages with a different method signature.

TPipelineStageDelegate = reference to procedure (const input, output:
  IOmniBlockingCollection);
TPipelineStageDelegateEx = reference to procedure (const input, output:
  IOmniBlockingCollection; const task: IOmniTask);

class function Pipeline: IOmniPipeline; overload;
class function Pipeline(const stages: array of TPipelineStageDelegate;
  const input: IOmniBlockingCollection = nil): IOmniPipeline; overload;
class function Pipeline(const stages: array of TPipelineStageDelegateEx;
  const input: IOmniBlockingCollection = nil): IOmniPipeline; overload;

Stages are implemented as anonymous procedures, procedures or methods taking two queue parameters, one for input and one for output (TPipelineStageDelegateEx stages additionally receive an IOmniTask parameter). Both queues are automatically created by the Pipeline implementation and passed to the stage delegate.
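A minimal sketch of both two-queue signatures follows, assuming a console program with OmniThreadLibrary on the search path. StageNumbers, StageIncrement and SumOfIncremented are names made up for this example. That IOmniPipeline.Cancel is what signals task.CancellationToken is an assumption about OtlParallel, not something stated in this section.

```pascal
program PipelineStageKinds;

{$APPTYPE CONSOLE}

uses
  SysUtils,
  OtlCommon,
  OtlSync,
  OtlCollections,
  OtlTask,
  OtlParallel;

// TPipelineStageDelegate: ignores its input queue and writes 1..100.
procedure StageNumbers(const input, output: IOmniBlockingCollection);
var
  i: integer;
begin
  for i := 1 to 100 do
    output.Add(i);
end;

// TPipelineStageDelegateEx: the extra task parameter gives the stage
// access to the task running it, e.g. to its cancellation token.
procedure StageIncrement(const input, output: IOmniBlockingCollection;
  const task: IOmniTask);
var
  value: TOmniValue;
begin
  for value in input do begin
    if task.CancellationToken.IsSignalled then
      Exit; // assumption: signalled when the pipeline is cancelled
    output.Add(value.AsInteger + 1);
  end;
end;

function SumOfIncremented: int64;
var
  pipeline: IOmniPipeline;
  value   : TOmniValue;
begin
  // Run creates every queue and hands it to the stage delegates.
  pipeline := Parallel.Pipeline
    .Stage(StageNumbers)
    .Stage(StageIncrement)
    .Run;
  Result := 0;
  for value in pipeline.Output do
    Inc(Result, value.AsInt64);
end;

begin
  Writeln(SumOfIncremented); // sum of 2..101 = 5150
end.
```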

Pipeline also supports the concept of simple stages, where the stage method accepts a TOmniValue input and provides a TOmniValue output. In this case, OmniThreadLibrary provides the loop which reads data from the input queue, calls your stage code and writes data to the output queue.

```pascal
TPipelineSimpleStageDelegate = reference to procedure(
  const input: TOmniValue; var output: TOmniValue);
```

A simple stage can produce zero or one data element for each input. The value is written to the output queue only if the code assigns a value to the output parameter.
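The zero-or-one rule can be illustrated with a small filtering stage. In this sketch (a console program; StageOddTimes10 and SumOfOddTimes10 are made-up names), odd inputs produce one output and even inputs produce none, because the stage simply leaves output unassigned. The pipeline creates its own input queue, so the program feeds it through the Input property and closes it with CompleteAdding.

```pascal
program PipelineSimpleStage;

{$APPTYPE CONSOLE}

uses
  SysUtils,
  OtlCommon,
  OtlCollections,
  OtlParallel;

// Simple stage: called once per input element. Assigning output emits
// one element; leaving it untouched emits nothing.
procedure StageOddTimes10(const input: TOmniValue; var output: TOmniValue);
begin
  if Odd(input.AsInteger) then
    output := input.AsInteger * 10;
end;

function SumOfOddTimes10(upTo: integer): int64;
var
  pipeline: IOmniPipeline;
  value   : TOmniValue;
  i       : integer;
begin
  pipeline := Parallel.Pipeline
    .Stage(StageOddTimes10)
    .Run;
  // The pipeline created the input queue itself; feed it and close it.
  for i := 1 to upTo do
    pipeline.Input.Add(i);
  pipeline.Input.CompleteAdding;
  Result := 0;
  for value in pipeline.Output do
    Inc(Result, value.AsInt64);
end;

begin
  Writeln(SumOfOddTimes10(10)); // (1 + 3 + 5 + 7 + 9) * 10 = 250
end.
```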

3.10.3 IOmniPipeline interface

All Parallel.Pipeline overloads return the IOmniPipeline interface.

```pascal
IOmniPipeline = interface
  procedure Cancel;
  function From(const queue: IOmniBlockingCollection): IOmniPipeline;
  function HandleExceptions: IOmniPipeline;
  function NoThrottling: IOmniPipeline;
  function NumTasks(numTasks: integer): IOmniPipeline;
  function OnStop(stopCode: TProc): IOmniPipeline; overload;
  function OnStop(stopCode: TOmniTaskStopDelegate): IOmniPipeline; overload;
  function OnStopInvoke(stopCode: TProc): IOmniPipeline;
  function Run: IOmniPipeline;
  function Stage(
    pipelineStage: TPipelineSimpleStageDelegate;
    taskConfig: IOmniTaskConfig = nil): IOmniPipeline; overload;
  function Stage(
    pipelineStage: TPipelineStageDelegate;
    taskConfig: IOmniTaskConfig = nil): IOmniPipeline; overload;
  function Stage(
    pipelineStage: TPipelineStageDelegateEx;
    taskConfig: IOmniTaskConfig = nil): IOmniPipeline; overload;
  function Stages(
    const pipelineStages: array of TPipelineSimpleStageDelegate;
    taskConfig: IOmniTaskConfig = nil): IOmniPipeline; overload;
  function Stages(
    const pipelineStages: array of TPipelineStageDelegate;
    taskConfig: IOmniTaskConfig = nil): IOmniPipeline; overload;
  function Stages(
    const pipelineStages: array of TPipelineStageDelegateEx;
    taskConfig: IOmniTaskConfig = nil): IOmniPipeline; overload;
  function Throttle(numEntries: integer; unblockAtCount: integer = 0):
    IOmniPipeline;
  function WaitFor(timeout_ms: cardinal): boolean;
  property Input: IOmniBlockingCollection read GetInput;
  property Output: IOmniBlockingCollection read GetOutput;
  property PipelineStage[idxStage: integer]: IOmniPipelineStage read GetPipelineStage;
end;
```

Various Stage and Stages overloads can be used to define one or more stages and optionally configure them with a task configuration block.

The Run function does all the hard work. It creates queues and sets up OmniThreadLibrary tasks.

The OnStop function assigns a termination handler: code that will be called when all pipeline stages stop working. This termination handler is called from one of the worker threads, not from the main thread! If you need to run code in the main thread, use the task configuration block on the last stage.

Another possibility is to use the OnStop overload that accepts a delegate with an IOmniTask parameter. You can then use task.Invoke to execute code in the context of the main thread.

Release [3.07.2] introduced the OnStopInvoke method, which works like OnStop except that the termination handler is automatically executed in the context of the owner thread via an implicit Invoke. For an example, see Parallel.ForEach.OnStopInvoke.
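The following sketch checks the claim that a plain OnStop handler runs in a worker thread by comparing the handler's thread ID with MainThreadID. It assumes a console program; the globals GStopThreadID and GStopped and the function name are made up for this example. Invoke and OnStopInvoke deliver the handler through the owner thread, which presumably has to process messages (as a VCL application does), so this console sketch sticks with plain OnStop and signals a TEvent instead.

```pascal
program PipelineOnStopThread;

{$APPTYPE CONSOLE}

uses
  Windows,
  SysUtils,
  SyncObjs,
  OtlCommon,
  OtlCollections,
  OtlParallel;

var
  GStopThreadID: cardinal;
  GStopped     : TEvent;

// Returns True if the OnStop handler ran in a thread other than the main one.
function OnStopRunsInWorkerThread: boolean;
var
  pipeline: IOmniPipeline;
begin
  GStopped := TEvent.Create(nil, true, false, '');
  try
    pipeline := Parallel.Pipeline
      .Stage(
        procedure (const input: TOmniValue; var output: TOmniValue)
        begin
          output := input;
        end)
      .OnStop(
        procedure
        begin
          // Executed by one of the pipeline's worker threads,
          // so it must not touch VCL controls directly.
          GStopThreadID := GetCurrentThreadId;
          GStopped.SetEvent;
        end)
      .Run;
    pipeline.Input.Add(42);
    pipeline.Input.CompleteAdding; // lets the only stage finish
    GStopped.WaitFor(INFINITE);
    Result := GStopThreadID <> MainThreadID;
  finally
    FreeAndNil(GStopped);
  end;
end;

begin
  Writeln(OnStopRunsInWorkerThread); // expected: TRUE
end.
```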

The From function sets the input queue. It will be passed to the first stage in the input parameter. If your code does not call this function, OmniThreadLibrary will automatically create the input queue for you. The input queue can be accessed through the Input property.

The output queue is always created automatically. It can be accessed through the Output property. Even if the last stage doesn't produce any result, this queue can be used to signal the end of computation. [When each stage ends, CompleteAdding is automatically called on its output queue. This allows the next stage to detect the end of input (the blocking collection enumerator will exit or TryTake will return False). The same applies to the pipeline's output queue.]
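Here is a sketch of a pipeline that reads from a queue supplied by the program. It assumes a console program and that TOmniBlockingCollection.Create (from OtlCollections) is used to create the queue; StageSquare and SumOfSquares are made-up names. The input queue must be closed with CompleteAdding, otherwise the stage would keep waiting for more data and the loop over Output would never end.

```pascal
program PipelineFromQueue;

{$APPTYPE CONSOLE}

uses
  SysUtils,
  OtlCommon,
  OtlCollections,
  OtlParallel;

procedure StageSquare(const input: TOmniValue; var output: TOmniValue);
begin
  output := input.AsInteger * input.AsInteger;
end;

function SumOfSquares(upTo: integer): int64;
var
  inQueue : IOmniBlockingCollection;
  pipeline: IOmniPipeline;
  value   : TOmniValue;
  i       : integer;
begin
  // Our own input queue, handed to the first stage via From.
  inQueue := TOmniBlockingCollection.Create;
  pipeline := Parallel.Pipeline
    .From(inQueue)
    .Stage(StageSquare)
    .Run;
  for i := 1 to upTo do
    inQueue.Add(i);
  // Without CompleteAdding the stage would wait for more input forever.
  inQueue.CompleteAdding;
  // The loop ends once the last stage finishes and CompleteAdding is
  // called on the output queue automatically.
  Result := 0;
  for value in pipeline.Output do
    Inc(Result, value.AsInt64);
end;

begin
  Writeln(SumOfSquares(10)); // 1 + 4 + ... + 100 = 385
end.
```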

The WaitFor function waits for up to timeout_ms milliseconds for the pipeline to finish the work. It returns True if all stages have processed the data before the time interval expires.
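A sketch of WaitFor with two different timeouts follows. The stage sleeps for 500 ms to simulate slow work (an assumption made only for this illustration), so a 50 ms wait should time out while a 10 s wait should succeed. StageSlow and WaitForBehaves are made-up names.

```pascal
program PipelineWaitFor;

{$APPTYPE CONSOLE}

uses
  Windows,
  SysUtils,
  OtlCommon,
  OtlCollections,
  OtlParallel;

procedure StageSlow(const input: TOmniValue; var output: TOmniValue);
begin
  Sleep(500); // simulate slow work
  output := input;
end;

// True if WaitFor first times out (stage still busy) and then succeeds.
function WaitForBehaves: boolean;
var
  pipeline     : IOmniPipeline;
  finishedEarly: boolean;
  finishedLate : boolean;
begin
  pipeline := Parallel.Pipeline
    .Stage(StageSlow)
    .Run;
  pipeline.Input.Add(1);
  pipeline.Input.CompleteAdding;
  finishedEarly := pipeline.WaitFor(50);    // stage is still sleeping
  finishedLate  := pipeline.WaitFor(10000); // plenty of time to finish
  Writeln('after 50 ms: ', finishedEarly, ', later: ', finishedLate);
  Result := (not finishedEarly) and finishedLate;
end;

begin
  Writeln(WaitForBehaves);
end.
```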

The NumTasks function sets the number of parallel execution tasks for the stage(s) just added with the Stage(s) function (in other words, call Stage followed by NumTasks to do that). If it is called before any stage is added, it specifies the default for all stages. The number of parallel execution tasks for a specific stage can then still be overridden by calling NumTasks after that Stage call. [See the Parallel stages section below for more information.]

If NumTasks receives a positive parameter (> 0), the number of worker tasks is set to that number. For example, NumTasks(16) starts 16 worker tasks, even if that is more than the number of available cores.

If NumTasks receives a negative parameter (< 0), it specifies the number of cores that should be reserved for other use. The number of worker tasks is then set to <number of available cores> - <number of reserved cores>. If, for example, the current process can use 16 cores and NumTasks(-4) is used, only 12 (16-4) worker tasks will be started.

Value 0 is not allowed and results in an exception.
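The three NumTasks rules can be restated as a small function. WorkerCount is a hypothetical helper written for this illustration, not part of OmniThreadLibrary, and the generic Exception it raises for 0 is the sketch's choice; OmniThreadLibrary's actual exception class is not specified here.

```pascal
program NumTasksRule;

{$APPTYPE CONSOLE}

uses
  SysUtils;

// Hypothetical helper, not part of OmniThreadLibrary: reproduces the
// NumTasks rule described above for a given number of available cores.
function WorkerCount(numTasks, availableCores: integer): integer;
begin
  if numTasks > 0 then
    // Positive: exactly that many tasks, even beyond the core count.
    Result := numTasks
  else if numTasks < 0 then
    // Negative: -numTasks cores are reserved for other work.
    // (No clamping: the text does not say what happens when more
    // cores are reserved than are available.)
    Result := availableCores + numTasks
  else
    raise Exception.Create('NumTasks(0) is not allowed');
end;

begin
  Writeln(WorkerCount(16, 8));  // 16
  Writeln(WorkerCount(-4, 16)); // 12
end.
```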

The Throttle function sets the throttling parameters for the stage(s) just added with the Stage(s) function. Like NumTasks, it affects either the global defaults or only the stage(s) just added. By default, throttling is set to 10,240 elements. [See the Throttling section below for more info.]

Before version [3.07] it was not possible to fully disable the throttling mechanism. To fix this problem, version [3.07] introduced the NoThrottling method, which disables throttling on the stage(s) just added with the Stage(s) function.

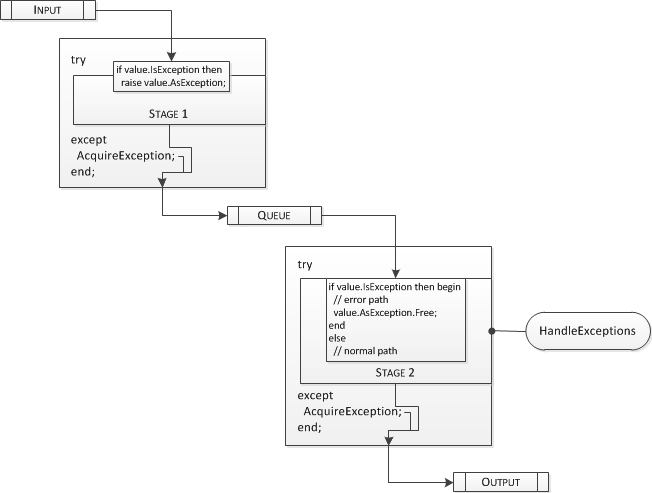
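The following sketch shows where the global and per-stage throttling calls go in a pipeline definition. It assumes a console program; the stage bodies and the name ThrottledSum are made up, and the comments only state which stage each call applies to, not which of the stage's queues is affected internally.

```pascal
program PipelineThrottling;

{$APPTYPE CONSOLE}

uses
  SysUtils,
  OtlCommon,
  OtlCollections,
  OtlParallel;

procedure StageGenerate(const input, output: IOmniBlockingCollection);
var
  i: integer;
begin
  for i := 1 to 100000 do
    output.Add(i);
end;

procedure StageMult2(const input: TOmniValue; var output: TOmniValue);
begin
  output := 2 * input.AsInteger;
end;

procedure StageSum(const input, output: IOmniBlockingCollection);
var
  sum  : int64;
  value: TOmniValue;
begin
  sum := 0;
  for value in input do
    Inc(sum, value.AsInt64);
  output.Add(sum);
end;

function ThrottledSum: int64;
var
  pipeline: IOmniPipeline;
  value   : TOmniValue;
begin
  pipeline := Parallel.Pipeline
    .Throttle(1000)        // before any Stage: default for all stages
    .Stage(StageGenerate)  // keeps the default set above
    .Stage(StageMult2)
      .Throttle(200, 50)   // StageMult2 only: numEntries 200, unblockAtCount 50
    .Stage(StageSum)
      .NoThrottling        // StageSum only: throttling disabled
    .Run;
  Result := 0;
  for value in pipeline.Output do // a single element: the sum
    Result := value.AsInt64;
end;

begin
  Writeln(ThrottledSum); // 2 * (1 + ... + 100000) = 10000100000
end.
```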

The HandleExceptions function changes the stage wrapper code so that it passes exceptions from the input queue to the stage code. Like NumTasks, it affects either the global defaults or only the stage(s) just added. [See the Exceptions section below for more info.]

The PipelineStage[] indexed property allows the program to access the input and output queue of each stage. This allows the code to insert messages at any point in the pipeline.

```pascal
IOmniPipelineStage = interface
  property Input: IOmniBlockingCollection read GetInput;
  property Output: IOmniBlockingCollection read GetOutput;
end;
```

3.10.3.1 Example

An example will help explain all this.

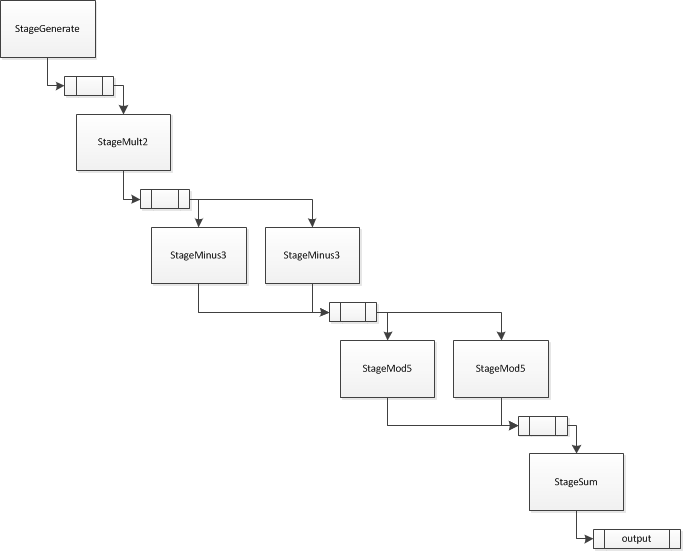

```pascal
Parallel.Pipeline
  .Throttle(1000)
  .Stage(StageGenerate)
  .Stage(StageMult2)
  .Stages([StageMinus3, StageMod5])
    .NumTasks(2)
  .Stage(StageSum)
  .Run;
```

First, a global throttling parameter is set. It will be applied to all stages. Two stages are then added, each with a separate call to the Stage function.

Two more stages are then added with a single call, and NumTasks(2) sets each of them to execute in two parallel tasks. Finally, another stage is added and the whole setup is executed.

The complete process will use seven tasks (one for StageGenerate, one for StageMult2, two for StageMinus3, two for StageMod5 and one for StageSum).
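To run the example above end to end, StageMinus3 and StageMod5 need implementations, which this section does not show. The sketch below assumes, as the names suggest, that they subtract 3 and take the remainder modulo 5. StageSum is the int64 variant of the aggregator shown later in this section, and RunExample is a made-up name. Because the parallel stages may reorder data, only an order-independent result such as a sum is meaningful here.

```pascal
program PipelineSevenTasks;

{$APPTYPE CONSOLE}

uses
  SysUtils,
  OtlCommon,
  OtlCollections,
  OtlParallel;

procedure StageGenerate(const input, output: IOmniBlockingCollection);
var
  i: integer;
begin
  for i := 1 to 1000000 do
    output.Add(i);
end;

procedure StageMult2(const input, output: IOmniBlockingCollection);
var
  value: TOmniValue;
begin
  for value in input do
    output.Add(2 * value.AsInteger);
end;

// Hypothetical implementation: not shown in the original text.
procedure StageMinus3(const input, output: IOmniBlockingCollection);
var
  value: TOmniValue;
begin
  for value in input do
    output.Add(value.AsInteger - 3);
end;

// Hypothetical implementation: not shown in the original text.
procedure StageMod5(const input, output: IOmniBlockingCollection);
var
  value: TOmniValue;
begin
  for value in input do
    // Delphi's mod takes the sign of the left operand: (-1) mod 5 = -1.
    output.Add(value.AsInteger mod 5);
end;

procedure StageSum(const input, output: IOmniBlockingCollection);
var
  sum  : int64;
  value: TOmniValue;
begin
  sum := 0;
  for value in input do
    Inc(sum, value.AsInt64);
  output.Add(sum);
end;

function RunExample: int64;
var
  pipeline: IOmniPipeline;
  value   : TOmniValue;
begin
  pipeline := Parallel.Pipeline
    .Throttle(1000)
    .Stage(StageGenerate)
    .Stage(StageMult2)
    .Stages([StageMinus3, StageMod5])
      .NumTasks(2)
    .Stage(StageSum)
    .Run;
  Result := 0;
  for value in pipeline.Output do // exactly one element: the sum
    Result := value.AsInt64;
end;

begin
  Writeln(RunExample); // 1999995
end.
```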

3.10.4 Generators, mutators, and aggregators

Let’s look at three different examples of multiprocessing stages.

A stage may accept no input and just generate an output. This will only happen in the first stage. (A middle stage accepting no input would render the whole pipeline rather useless.)

```pascal
procedure StageGenerate(const input, output: IOmniBlockingCollection);
var
  i: integer;
begin
  for i := 1 to 1000000 do
    output.Add(i);
end;
```

A stage may also read data from the input and generate the output. Zero, one or more elements could be generated for each input.

```pascal
procedure StageMult2(const input, output: IOmniBlockingCollection);
var
  value: TOmniValue;
begin
  for value in input do
    output.Add(2 * value.AsInteger);
end;
```

The last example is a stage that reads data from the input, performs some operation on it and returns the aggregation of this data.

```pascal
procedure StageSum(const input, output: IOmniBlockingCollection);
var
  sum  : int64; // int64: a long stream of numbers can exceed the integer range
  value: TOmniValue;
begin
  sum := 0;
  for value in input do
    Inc(sum, value.AsInt64);
  output.Add(sum);
end;
```

All examples are just special cases of the general principle. Pipeline doesn’t enforce any correlation between the amount of input data and the amount of output data. There’s also absolutely no requirement that data must be all numbers. Feel free to pass around anything that can be contained in a TOmniValue.
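As a small illustration that stages are not limited to numbers, the sketch below passes strings through a simple stage. StageUpper and UpperCaseAll are made-up names and a console program is assumed. It relies only on TOmniValue holding strings (AsString); other types that TOmniValue supports can be passed the same way.

```pascal
program PipelineStrings;

{$APPTYPE CONSOLE}

uses
  SysUtils,
  OtlCommon,
  OtlCollections,
  OtlParallel;

// Simple stage working on strings instead of numbers.
procedure StageUpper(const input: TOmniValue; var output: TOmniValue);
begin
  output := UpperCase(input.AsString);
end;

function UpperCaseAll: string;
var
  pipeline: IOmniPipeline;
  value   : TOmniValue;
begin
  pipeline := Parallel.Pipeline
    .Stage(StageUpper)
    .Run;
  pipeline.Input.Add('alpha');
  pipeline.Input.Add('beta');
  pipeline.Input.CompleteAdding;
  Result := '';
  for value in pipeline.Output do
    Result := Result + value.AsString + ' ';
  Result := Trim(Result);
end;

begin
  Writeln(UpperCaseAll);
end.
```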

3.10.5 Throttling

In some cases, a large amount of data may be passed through the multistage process. If one stage is suspended for some time, or if it performs a calculation that is slower than the calculation in the previous stage, this stage's input queue may fill up with data, which can cause lots of memory to be allocated and later released. To even out the data flow, Pipeline uses throttling.

Throttling sets the maximum size of the blocking collection (in TOmniValue units). When the specified number of data items is stored in the collection, no more data can be added: the Add function simply blocks until the collection is empty enough or CompleteAdding has been called. The collection is deemed empty enough when the data count drops below a threshold, which can either be passed as the second parameter to the Throttle function or, if that parameter is not provided, is calculated as 3/4 of the maximum size.
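The blocking/unblocking behaviour can be modelled without threads. TThrottleModel, UnblockThreshold, Mark and Scenario below are hypothetical names for a single-threaded illustration of the rule just described, not OmniThreadLibrary code: additions are refused once the limit is reached and accepted again only after the count drops below the threshold. Rounding 3/4 of the limit with integer division is this sketch's choice.

```pascal
program ThrottleModel;

{$APPTYPE CONSOLE}

uses
  SysUtils;

// Hypothetical: count below which additions are accepted again.
function UnblockThreshold(numEntries, unblockAtCount: integer): integer;
begin
  if unblockAtCount > 0 then
    Result := unblockAtCount
  else
    Result := (numEntries * 3) div 4; // default: 3/4 of the limit
end;

type
  // Hypothetical single-threaded model of a throttled collection.
  TThrottleModel = record
    Limit, UnblockAt, Count: integer;
    Blocked: boolean;
    procedure Init(numEntries, unblockAtCount: integer);
    function TryAdd: boolean; // False where a real Add would block
    procedure Take;
  end;

procedure TThrottleModel.Init(numEntries, unblockAtCount: integer);
begin
  Limit := numEntries;
  UnblockAt := UnblockThreshold(numEntries, unblockAtCount);
  Count := 0;
  Blocked := false;
end;

function TThrottleModel.TryAdd: boolean;
begin
  if Count >= Limit then
    Blocked := true;
  Result := not Blocked;
  if Result then
    Inc(Count);
end;

procedure TThrottleModel.Take;
begin
  Dec(Count);
  if Blocked and (Count < UnblockAt) then
    Blocked := false;
end;

function Mark(accepted: boolean): char;
begin
  if accepted then Result := '+' else Result := '-';
end;

// Limit 4, default threshold 3; '+' = accepted, '-' = would block.
function Scenario: string;
var
  m: TThrottleModel;
  i: integer;
begin
  Result := '';
  m.Init(4, 0);
  for i := 1 to 5 do
    Result := Result + Mark(m.TryAdd); // four accepted, fifth blocked
  m.Take;                              // count 3: not below 3, still blocked
  Result := Result + Mark(m.TryAdd);
  m.Take;                              // count 2: below 3, unblocked
  Result := Result + Mark(m.TryAdd);
end;

begin
  Writeln(UnblockThreshold(10240, 0)); // 7680 for the default limit
  Writeln(Scenario);                   // ++++--+
end.
```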

3.10.6 Parallel stages